Tutorial based on https://blog.alexellis.io/create-a-3-node-k3s-cluster-with-k3sup-digitalocean/ and converted to use Google Cloud gcloud commands.

At the end there is an all-in-one curl | bash -s up command, in case you want to do this again in the future.

$ gcloud compute instances create k3s-1 \
--machine-type n1-standard-1 \
--tags k3s,k3s-master

Created [https://www.googleapis.com/.../zones/us-west1-a/instances/k3s-1].

$ gcloud compute instances create k3s-2 k3s-3 \
--machine-type n1-standard-1 \
--tags k3s,k3s-worker

Created [https://www.googleapis.com/.../zones/us-west1-a/instances/k3s-2].

Created [https://www.googleapis.com/.../zones/us-west1-a/instances/k3s-3].

NAME ZONE MACHINE_TYPE PREEMPTIBLE INTERNAL_IP EXTERNAL_IP STATUS

k3s-1 us-west1-a n1-standard-1 10.138.0.6 35.197.41.27 RUNNING

k3s-2 us-west1-a n1-standard-1 10.138.0.7 34.82.211.122 RUNNING

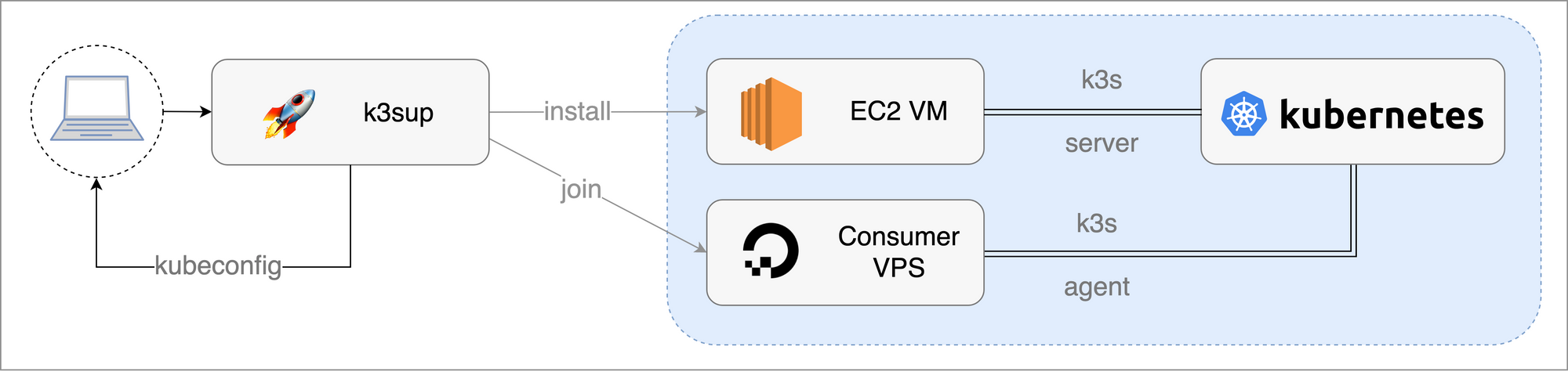

k3s-3 us-west1-a n1-standard-1 10.138.0.10 34.83.131.131 RUNNINGFrom Alex’s blog, here is a conceptual diagram of what we are about to do, except with Google Cloud VMs… His blog post was about Digital Ocean, so I assume this diagram has been around a few blog posts.

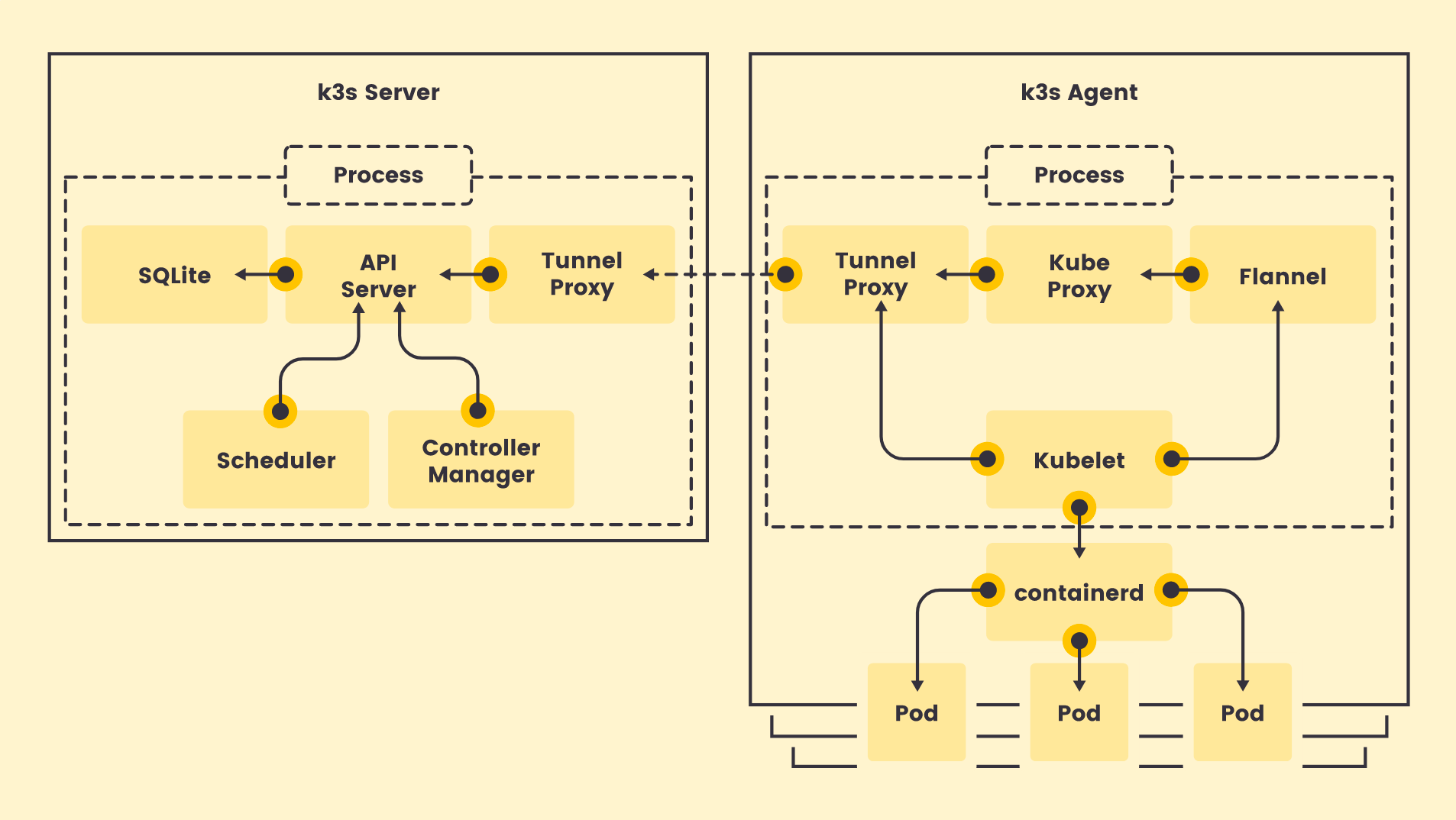

From the k3s website we can see the internal components being run on the primary install and secondary agent join VMS:

Alex’s k3sup tool uses SSH to access each VM and install k3s on it. We can use gcloud to set up the private key we need for SSH access.

gcloud compute config-ssh

This creates the ~/.ssh/google_compute_known_hosts file, and also adds some hostname entries to ~/.ssh/config that we can ignore for now.

primary_server_ip=35.197.41.27
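Rather than copying the external IP out of the instance table by hand, you can ask gcloud for it. This is a minimal sketch, assuming the instance lives in your default gcloud zone (otherwise add --zone); the helper name k3s_external_ip is hypothetical and not part of gcloud or k3sup:

```shell
# Hypothetical helper: print the external (NAT) IP of a GCE instance.
# Assumes a default zone is configured; otherwise also pass --zone.
k3s_external_ip() {
  gcloud compute instances describe "$1" \
    --format='get(networkInterfaces[0].accessConfigs[0].natIP)'
}

# Usage (requires an authenticated gcloud):
#   primary_server_ip=$(k3s_external_ip k3s-1)
```

The result is the same value as the hard-coded primary_server_ip above, but it keeps working if the VM is recreated with a new address.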

k3sup install --ip $primary_server_ip --context k3s --ssh-key ~/.ssh/google_compute_engine --user $(whoami)

We can SSH into the VM and confirm it is full of running processes that look like Kubernetes. Eventually you will see something like:

$ gcloud compute ssh k3s-1
$ ps axwf
...
925 ? Ssl 0:15 /usr/local/bin/k3s server --tls-san 35.197.41.27
958 ? Sl 0:03 \_ containerd -c /var/lib/rancher/k3s/agent/etc/containerd/config.toml -a /run/k3s/containerd/containerd.sock --state /run/k3s/con
1241 ? Sl 0:00 \_ containerd-shim -namespace k8s.io -workdir /var/lib/rancher/k3s/agent/containerd/io.containerd.runtime.v1.linux/k8s.io/197a
1259 ? Ss 0:00 | \_ /pause
1340 ? Sl 0:00 \_ containerd-shim -namespace k8s.io -workdir /var/lib/rancher/k3s/agent/containerd/io.containerd.runtime.v1.linux/k8s.io/3442
1358 ? Ssl 0:00 | \_ /coredns -conf /etc/coredns/Corefile
1627 ? Sl 0:00 \_ containerd-shim -namespace k8s.io -workdir /var/lib/rancher/k3s/agent/containerd/io.containerd.runtime.v1.linux/k8s.io/773e
1655 ? Ss 0:00 | \_ /pause
1728 ? Sl 0:00 \_ containerd-shim -namespace k8s.io -workdir /var/lib/rancher/k3s/agent/containerd/io.containerd.runtime.v1.linux/k8s.io/78a2
1746 ? Ss 0:00 | \_ /pause
1909 ? Sl 0:00 \_ containerd-shim -namespace k8s.io -workdir /var/lib/rancher/k3s/agent/containerd/io.containerd.runtime.v1.linux/k8s.io/07f2
1930 ? Ss 0:00 | \_ /bin/sh /usr/bin/entry
1962 ? Sl 0:00 \_ containerd-shim -namespace k8s.io -workdir /var/lib/rancher/k3s/agent/containerd/io.containerd.runtime.v1.linux/k8s.io/7e55
1980 ? Ss 0:00 | \_ /bin/sh /usr/bin/entry
2010 ? Sl 0:00 \_ containerd-shim -namespace k8s.io -workdir /var/lib/rancher/k3s/agent/containerd/io.containerd.runtime.v1.linux/k8s.io/708e
2027 ? Ss 0:00 | \_ /bin/sh /usr/bin/entry
2078 ? Sl 0:00 \_ containerd-shim -namespace k8s.io -workdir /var/lib/rancher/k3s/agent/containerd/io.containerd.runtime.v1.linux/k8s.io/ca0a
2095 ? Ssl 0:00 \_ /traefik --configfile=/config/traefik.toml

$ exit

To access our (1-node) cluster, we first need to open a port to allow our kubectl requests in through port 6443:

$ gcloud compute firewall-rules create k3s --allow=tcp:6443 --target-tags=k3s
Creating firewall...Created [https://www.googleapis.com/.../firewalls/k3s].
Creating firewall...done.
NAME  NETWORK  DIRECTION  PRIORITY  ALLOW     DENY  DISABLED
k3s   default  INGRESS    1000      tcp:6443        False

k3sup install saved a kubeconfig file into the current directory, so point kubectl at it:

export KUBECONFIG=$(pwd)/kubeconfig

kubectl get nodes

The output will show our nascent cluster coming into shape:

NAME   STATUS  ROLES   AGE    VERSION
k3s-1  Ready   master  4m57s  v1.15.4-k3s.1

We can now set up k3s on the remaining nodes, via their external IPs 34.82.211.122 and 34.83.131.131 in our example above.

We use k3sup join instead of k3sup install to add nodes to our tiny cluster:

k3sup join --ip 34.82.211.122 --server-ip $primary_server_ip --ssh-key ~/.ssh/google_compute_engine --user $(whoami)

k3sup join --ip 34.83.131.131 --server-ip $primary_server_ip --ssh-key ~/.ssh/google_compute_engine --user $(whoami)
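If you'd rather not repeat the same flags for every worker, a small loop does the same job. This is a sketch; the function name join_workers is hypothetical, and it assumes primary_server_ip is already set as above and that the gcloud SSH key exists:

```shell
# Hypothetical helper: run "k3sup join" once for each IP given as an argument.
# Assumes primary_server_ip is set and ~/.ssh/google_compute_engine exists.
join_workers() {
  for ip in "$@"; do
    k3sup join --ip "$ip" \
      --server-ip "$primary_server_ip" \
      --ssh-key ~/.ssh/google_compute_engine \
      --user "$(whoami)" || return 1  # stop at the first failed join
  done
}

# Usage:
#   join_workers 34.82.211.122 34.83.131.131
```

Stopping at the first failure is a deliberate choice here, so a broken SSH setup surfaces immediately rather than once per worker.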

Or, if you have lots of worker nodes, you can join them all with:

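gcloud compute instances list \
--filter=tags.items=k3s-worker \
--format="get(networkInterfaces[0].accessConfigs.natIP)" | \
xargs -L1 k3sup join \
--server-ip $primary_server_ip \
--ssh-key ~/.ssh/google_compute_engine \
--user $(whoami) \
--ip

Because xargs -L1 runs k3sup join once per line of input and appends that line to the end of the command, each worker's IP lands after the trailing --ip flag.

The resulting cluster is up and running: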

$ kubectl get nodes
NAME   STATUS  ROLES   AGE    VERSION
k3s-3  Ready   worker  4m24s  v1.15.4-k3s.1
k3s-2  Ready   worker  4m59s  v1.15.4-k3s.1
k3s-1  Ready   master  6m8s   v1.15.4-k3s.1
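Workers can take a little while to register. Rather than re-running kubectl get nodes by hand, you could poll until the expected number of nodes report Ready. A minimal sketch, assuming KUBECONFIG is exported as above; the function names, the 60-attempt limit and the 5-second interval are arbitrary, hypothetical choices rather than anything k3sup provides:

```shell
# Hypothetical helper: count nodes whose STATUS column is exactly "Ready"
# (NotReady or Ready,SchedulingDisabled nodes are not counted).
count_ready_nodes() {
  kubectl get nodes --no-headers | awk '$2 == "Ready"' | wc -l | tr -d ' '
}

# Poll until at least $1 nodes are Ready.
# $2 = max attempts (default 60), $3 = seconds between attempts (default 5).
wait_for_ready_nodes() {
  local want=$1 tries=${2:-60} interval=${3:-5}
  while [ "$tries" -gt 0 ]; do
    if [ "$(count_ready_nodes)" -ge "$want" ]; then
      return 0
    fi
    tries=$((tries - 1))
    sleep "$interval"
  done
  echo "timed out waiting for $want Ready nodes" >&2
  return 1
}

# Usage, for the 3-node cluster in this post:
#   wait_for_ready_nodes 3 && kubectl get nodes
```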

$ kubectl describe node/k3s-1
...

Cleanup

To clean up the three VMs and the firewall rule:

gcloud compute instances delete k3s-1 k3s-2 k3s-3 --delete-disks all

gcloud compute firewall-rules delete k3s

If you have lots of nodes, you can delete them all:

gcloud compute instances list \
--filter=tags.items=k3s --format="get(name)" | \
xargs gcloud compute instances delete

All in one command

I’ve packaged the create (up) and delete (down) instructions above into a curl | bash helper script via a Gist.

To bring up a 3-node cluster of k3s:

curl -sSL https://gist.githubusercontent.com/drnic/9c5f2d58865c8595fe3aa77672f3ebc8/raw/cf7b10072da392c07257ee33367f3daab2d484d7/k3sup-gce.sh | bash -s up

To tear it down:

curl -sSL https://gist.githubusercontent.com/drnic/9c5f2d58865c8595fe3aa77672f3ebc8/raw/cf7b10072da392c07257ee33367f3daab2d484d7/k3sup-gce.sh | bash -s down
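The Gist's contents aren't reproduced here. As a rough illustration of how bash -s up reaches such a script (-s makes bash read commands from standard input, and the arguments after it become the positional parameters, so up arrives as $1), here is a hypothetical up/down skeleton that strings together the commands from this post. It is not the Gist itself; it assumes a default gcloud zone, k3sup on your PATH, and the instance names used above, and it adds gcloud's global --quiet flag to skip the delete confirmation prompts:

```shell
#!/bin/sh
# Hypothetical up/down skeleton, NOT the contents of the Gist above.
# Assumes gcloud has a default zone configured and k3sup is on PATH.

ssh_key=~/.ssh/google_compute_engine

k3s_up() {
  gcloud compute instances create k3s-1 \
    --machine-type n1-standard-1 --tags k3s,k3s-master || return 1
  gcloud compute instances create k3s-2 k3s-3 \
    --machine-type n1-standard-1 --tags k3s,k3s-worker || return 1
  gcloud compute firewall-rules create k3s \
    --allow=tcp:6443 --target-tags=k3s || return 1
  gcloud compute config-ssh || return 1
  # Look up the primary's external IP instead of hard-coding it.
  primary_server_ip=$(gcloud compute instances describe k3s-1 \
    --format='get(networkInterfaces[0].accessConfigs[0].natIP)') || return 1
  k3sup install --ip "$primary_server_ip" --context k3s \
    --ssh-key "$ssh_key" --user "$(whoami)" || return 1
  # Join every instance tagged k3s-worker.
  for ip in $(gcloud compute instances list \
      --filter=tags.items=k3s-worker \
      --format='get(networkInterfaces[0].accessConfigs[0].natIP)'); do
    k3sup join --ip "$ip" --server-ip "$primary_server_ip" \
      --ssh-key "$ssh_key" --user "$(whoami)" || return 1
  done
}

k3s_down() {
  # --quiet disables gcloud's interactive confirmation prompts.
  gcloud compute instances delete k3s-1 k3s-2 k3s-3 --delete-disks all --quiet || return 1
  gcloud compute firewall-rules delete k3s --quiet
}

# "bash -s up" passes "up" as $1; with no argument nothing runs.
case "${1:-}" in
  up) k3s_up ;;
  down) k3s_down ;;
  "") ;;
  *) echo "usage: $0 up|down" >&2; exit 1 ;;
esac
```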