No, I’m not crazy, and no I’m not trolling you! This is for real!

No longer single-platform or closed source, Microsoft’s PowerShell Core is now an open source, full-featured, cross-platform (MacOS, Linux, more) shell sporting some serious improvements over the venerable /bin/[bash|zsh] for those souls brave enough to use it as their daily driver.

I made the switch about six months ago and couldn’t be happier; it’s by far one of the best tooling/workflow decisions I’ve made in my multi-decade career. PowerShell’s consistent naming conventions, built-in documentation system, and object-oriented approach have made me more productive by far, and I’ve had almost zero challenges integrating it with my day-to-day workflow despite using a mix of both Linux and MacOS.

At a Glance

- Multi-Platform, Multiple Installation Options:
  - Linux: deb, rpm, AUR, or just unpack a tarball and run
  - MacOS: Homebrew cask: brew install powershell --cask; Intel x64 and arm64 available; .pkg installers downloadable
    - Only available as a cask, and casks are unavailable on Linux, so I suggest other avenues for you linuxbrew folks out there.

- POSIX binaries work out of the box as expected
  - ps aux | grep -i someproc works fine
  - No emulation involved whatsoever, no containers, no VMs, no “hax”
  - Could be enhanced via recently-released Crescendo

- No trust? No problem! It’s fully open source!

- Killer feature: Real CLASSES, TYPES, OBJECTS, METHODS and PROPERTIES. No more string manipulation fragility!

Scripting is much easier and more pleasant with PowerShell because its syntax is very similar to many other scripting languages (unlike bash). PowerShell also wins out when it comes to naming conventions for built-in commands and statements. You can invoke old-school POSIX-only commands through PowerShell and they work just like before, with no changes; so things like ps aux or sudo vim /etc/hosts work out of the box without any change in your workflow at all.

I don’t have to worry about what version of bash or zsh is installed on the target operating system, nor am I worried about Apple changing that on me by sneaking it into a MacOS upgrade or dropping something entirely via a minor update.

Developer 1: Here’s a shell script for that work thing.

Developer 2: It doesn’t run on my computer

Developer 1: What version of bash are you using?

Developer 2: Whatever ships with my version of MacOS

Developer 1: Do echo $BASH_VERSION, what’s that say?

Developer 2: Uhh, says 3.2

Developer 1: Dear god that’s old!

Developer 3: You guys wouldn’t have this problem with PowerShell Core

The biggest advantage PowerShell provides, by far, is that it doesn’t deal in mere simplistic strings alone, but in full-fledged classes and objects, with methods, properties, and data types. No more fragile grep|sed|awk nonsense! You won’t have to worry about breaking everything if you update the output of a PowerShell script! Try changing a /bin/sh script to output JSON by default and see what happens to your automation!

PowerShell works exactly as you would expect on Linux and MacOS, right out of the box. Invoking and running compiled POSIX binaries (e.g. ps|cat|vim|less, etc.) works exactly like it does with bash or zsh and you don’t have to change that part of your workflow whatsoever (which is good for those of us with muscle memory built over 20+ years!). You can set up command aliases, new shell functions, a personal profile (equivalent of ~/.bashrc), custom prompts and shortcuts – whatever you want! If you can do it with bash, you can do it BETTER with PowerShell.
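To make that a little more concrete, here’s a minimal sketch of what a personal profile might contain. Every name in it (ll, mkcd, the prompt text) is made up for illustration; none of it comes from my actual profile, and your $PROFILE path will vary by platform.

```powershell
# Illustrative profile snippet; save it to the file that $PROFILE points at.

# A command alias (aliases map a name to a command; they can't carry arguments).
Set-Alias -Name ll -Value Get-ChildItem

# A shell function: create a directory (if needed) and change into it.
function mkcd {
    param([Parameter(Mandatory)][string] $Path)
    New-Item -ItemType Directory -Path $Path -Force | Out-Null
    Set-Location -Path $Path
}

# A custom prompt: PowerShell calls the function named "prompt"
# each time it needs to draw one, and prints whatever string it returns.
function prompt {
    "PS $(Split-Path -Leaf $PWD)> "
}
```

Anything that needs arguments baked in belongs in a function rather than an alias, which is why mkcd is a function here.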

Taken all together, the case for trying out modern PowerShell is incredibly strong. You’ll be shocked at how useful it is! The jolt it’ll give your productivity is downright electrifying and it can seriously amp up your quality of life!

Okay, okay, fine: I’ll stop with the electricity puns. I promise nothing.

Nothing wrong with bash/zsh

Let me get this out of the way: There’s nothing wrong with bash or zsh. They’re fine. They work, they work well, they’re fast as hell, and battle-tested beyond measure. I’m absolutely NOT saying they’re “bad” or that you’re “bad” for using them. I did too, for over 20 years! And I still do every time I hit [ENTER] after typing ssh [...]! They’ve been around forever, and they’re well respected for good reason.

PowerShell is simply different, based on a fundamentally more complex set of paradigms than the authors of bash or zsh could have imagined at the time those projects began. In fact, pwsh couldn’t exist in its current state without standing on the shoulders of giants like bash and zsh, so respect, here, is absolutely DUE.

That said, I stand by my admittedly-controversial opinion that PowerShell is just plain better in almost all cases. This post attempts to detail why I’m confident in that statement.

bash and zsh are Thomas Edison minus the evil: basic, safe, known, and respected, if a bit antiquated. PowerShell is like Nikola Tesla: a “foreigner” with a fundamentally unique perspective, providing a more advanced approach that’s far ahead of its time.

A Tale of Two PowerShells

You may see references to two flavors of PowerShell out there on the interweb: “Windows PowerShell” and “PowerShell Core”:

- “Windows” PowerShell typically refers to the legacy variant of PowerShell, version 5.1 or earlier, that indeed is Windows-only. It’s still the default PowerShell install on Windows 10/11 (as of this writing), but with no new development/releases for this variant since 2016, I don’t advise using it.

- PowerShell Core is what you want: cross-platform, open source, and as of this writing, at version 7.2.

Of the two, you want PowerShell Core, which refers to PowerShell version 6.0 or higher. Avoid all others.

For the remainder of this article, any references to “PowerShell” or pwsh refer exclusively to PowerShell Core. Pretend Windows PowerShell doesn’t exist; it shouldn’t, and while Microsoft has yet to announce its official EOL, the trend is clear: Core is the future.

PowerShell: More than a Shell

PowerShell is more than simply a shell. It’s an intuitive programming environment and scripting language that’s been wrapped inside a feature-packed REPL and heavily refined with an intentional focus on better user experience via consistency and patterns without loss of execution speed or efficiency.

Basically, if you can do it in bash or zsh, you can do it – and a whole lot more – in PowerShell. In most cases, you can do it faster and easier, leading to a far more maintainable final result (e.g. tool, library, etc.) that, thanks to PowerShell Core’s multi-platform nature, is arguably more portable than anything written for bash/zsh (which require non-trivial effort to install/update/configure on Windows).

And with modules from the PowerShell Gallery, it can be extended even further, with secrets management capabilities and even a system automation framework known as “Desired State Configuration” (DSC).

Note: DSC is, as of this writing, a Windows-Only feature. Starting in PowerShell Core 7.2 they moved it out of PowerShell itself and into a separate module to enable future portability. In DSC version 3.0, currently in “preview”, it’s expected to be available on Linux. Whether or not I’d trust a production Linux machine with this, however, is another topic entirely. Caveat emptor.

A Scripting Language So Good It’ll Shock You

PowerShell really shines as a fully-featured scripting language with one critical improvement not available in bash or zsh: objects with methods and properties of various data types.

Say goodbye to the arcane insanity that is sed and associated madness! With PowerShell, you don’t get back mere strings, you get back honest-to-goodness OBJECTS with properties and methods, each of which corresponds to a data type!

No more being afraid to modify the output of that Perl script from 1998 that’s holding your entire infrastructure together because it’ll crash everything if you put an extra space in the output, or – *gasp* – output JSON!
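Here’s a small sketch of what “objects, not strings” looks like in practice. The data is invented and built by hand so the example doesn’t depend on your machine; the point is only that properties keep their types and that changing the output format doesn’t touch the objects underneath.

```powershell
# Made-up records, built by hand so the example is deterministic.
$services = @(
    [pscustomobject]@{ Name = 'web';   Port = 8080; Started = [datetime]'2022-01-10' }
    [pscustomobject]@{ Name = 'db';    Port = 5432; Started = [datetime]'2022-01-03' }
    [pscustomobject]@{ Name = 'cache'; Port = 6379; Started = [datetime]'2022-01-07' }
)

# Properties are typed: Port is an integer, so -gt compares numbers, not text.
$highPorts = $services | Where-Object { $_.Port -gt 6000 }

# Started is a real DateTime, so sorting is chronological and methods are available.
$oldest    = $services | Sort-Object Started | Select-Object -First 1
$weekLater = $oldest.Started.AddDays(7)

# Switching the presentation to JSON is one more pipeline stage;
# nothing upstream has to change.
$json = $highPorts | Select-Object Name, Port | ConvertTo-Json
```

If a consumer wants CSV instead, swapping ConvertTo-Json for ConvertTo-Csv is the whole change.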

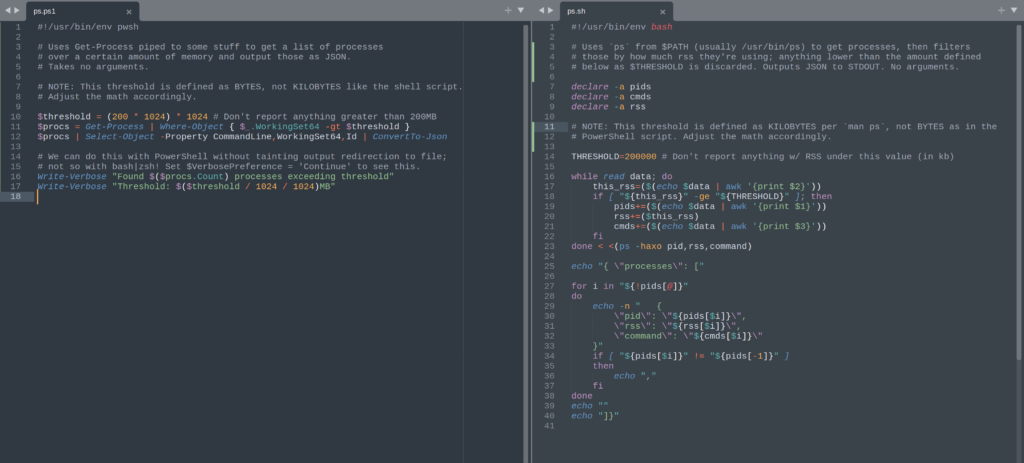

Purely for the purposes of demonstration, take a look at these two scripts for obtaining a list of currently running processes that exceed a given amount of memory. I’m no shell script whiz by any means, but even if /usr/bin/ps had a consistent, unified implementation across BSD, MacOS, Linux and other POSIX operating systems, you’d still have a much harder time using bash than you do with PowerShell:

PowerShell (left): 3 lines. bash (right): 25. You do the math.

Rather than lengthen an article already in the running for “TL;DR of the month”, I’ll just link to gists for those scripts:

Disclaimer: I never claimed to be a shell script whiz, but I’d be surprised to see any bash/zsh implementation do this easier without additional tools – which PowerShell clearly doesn’t need.

In the case of bash, since we have to manipulate strings directly, the output formatting is absolutely crucial; any changes, and the entire shell script falls apart. This is fundamentally fragile, which makes it error prone, which means it’s high-risk. It also requires some external tooling or additional work on the part of the script author to output valid JSON. And if you look at that syntax, you might go blind!

By contrast, what took approximately 25-ish lines in bash takes only three with PowerShell, and you could even shorten that if readability wasn’t a concern. Additionally, PowerShell allows you to write data to multiple output “channels”, such as “Verbose” and “Debug”, in addition to STDOUT. This way I can run the above PowerShell script, redirect its output to a file, and still get that diagnostic information on my screen, but NOT in the file, thus separating the two. Put simply, I can output additional information without STDERR on a per-run basis whenever I want, without any chance of corrupting the final output result, which may be relied upon by other programs (redirection to file, another process, etc.)
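Since the gists aren’t reproduced here, the following is a rough sketch of the same idea and not the original script. The function name Get-BigProcess, its -MinBytes parameter, and the output file name are all my own placeholders. It shows the two things described above: filtering on a typed property, and sending diagnostics to the Verbose stream so they never end up in the redirected output.

```powershell
function Get-BigProcess {
    [CmdletBinding()]   # enables the common -Verbose and -Debug switches
    param(
        # Minimum working-set size in bytes; 100MB is a built-in numeric literal.
        [long] $MinBytes = 100MB
    )

    # Diagnostic text goes to the Verbose stream, not the output stream.
    Write-Verbose "Looking for processes using at least $MinBytes bytes"

    Get-Process |
        Where-Object { $_.WorkingSet64 -ge $MinBytes } |
        Select-Object Id, ProcessName, WorkingSet64
}

# The file receives only JSON; the -Verbose message still shows on screen.
$outFile = Join-Path ([IO.Path]::GetTempPath()) 'big-processes.json'
Get-BigProcess -MinBytes 50MB -Verbose | ConvertTo-Json | Set-Content -Path $outFile
```

Leave off -Verbose and the diagnostic line simply disappears, with no change to what lands in the file.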

Plug In to (optional) Naming Conventions

Unlike the haphazard naming conventions mess that is the *nix shell scripting and command world, the PowerShell community has established a well-designed, explicit, and consistent set of naming conventions for commands issued in the shell, be they available as modules installed by default, obtained elsewhere, or even stuff you write yourself. You’re not forced into these naming conventions of course, but once you’ve seen properly-named commands in action, you’ll never want to go back. The benefits become self-evident almost immediately:

| *nix shell command or utility | PowerShell equivalent | Description |
|---|---|---|
| cd | Set-Location | Change directories |
| pushd / popd | Push-Location / Pop-Location | push/pop location stack |
| pwd | Get-Location | What directory am I in? |
| cat | Get-Content | Display contents of a file (generally plain text) on STDOUT |
| which | Get-Command | Find out where a binary or command is, or see which one gets picked up from $PATH first |
| pbcopy / pbpaste on MacOS (Linux or BSD, varies) | Set-Clipboard / Get-Clipboard | Modify or retrieve the contents of the clipboard/paste buffer on your local computer |
| echo -e "\e[31mRed Text\e[0m" | Write-Host -ForegroundColor Red "Red Text" | Write some text to the console in color (red in this example) |

No, you don’t literally have to type Set-Location every single time you want to change directories. Good ‘ol cd still works just fine, as do dozens of common *nix commands. Basically just use it like you would bash and it “Just Works™”.

To see all aliases at runtime, try Get-Alias. To discover commands, try Get-Command *whatever*. Tab-completion is also available out-of-the-box.

See the pattern? All these commands are in the form of Verb-Noun. They all start with what you want to do, then end with what you want to do it TO. Want to WRITE stuff to the HOST’s screen? Write-Host. Want to GET what LOCATION (directory) you’re currently in? Get-Location. You could also run $PWD | Write-Host to take the automatic variable $PWD – present working directory – and pipe that to the aforementioned echo equivalent. (To simplify it even further, the pipe and everything after it aren’t technically required unless in a script!)

Most modules for PowerShell follow these conventions as well, so command discoverability becomes nearly automatic. With known, established, consistent conventions, you’ll never wonder what some command is called ever again because it’ll be easily predictable.

And if not, there’s a real easy way to find out what’s what:

Get-Verb

# Shows established verbs with descriptions of each

Get-Command -Verb *convert*

# Shows all commands whose verb contains "convert"

# For example, ConvertFrom-Json, ConvertTo-Csv, etc.

Get-Command -Noun File

# What's the command to write stuff to a file?

# Well, look up all the $VERB-File commands to start!

# See also: Get-Command *file* for all commands with "file" in the name

Note that cAsE sEnSiTiViTy is a little odd with PowerShell on *nix:

| If the command/file is from… | Is it cAsE sEnSiTiVe? | Are its args cAsE sEnSiTiVe? |
|---|---|---|
| $PATH or the underlying OS/filesystem | YES | Generally yes (depends on the implementation) |
| PowerShell itself (cmdlet) | No | Generally no (possible, but not common) |

Note that there are always exceptions to every rule, so there are times the above may fail you. Snowflakes happen. My general rule of thumb, which has never steered me wrong in these cases, is this:

Assume EVERYTHING is cAsE sEnSiTiVe.

If you’re wrong, it works. If you’re right, it works. Either way, you win!
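A quick sketch of the PowerShell half of that table, using only built-in commands. The native-binary half depends on your OS and filesystem, so it only appears as a comment.

```powershell
# Cmdlet names are matched case-insensitively by PowerShell itself.
$a = Get-Location
$b = get-location
$c = GET-LOCATION

# String comparison operators ignore case by default as well;
# the c-prefixed variants (-ceq, -clike, ...) are the case-sensitive ones.
'README.md' -eq  'readme.md'    # True
'README.md' -ceq 'readme.md'    # False

# Native binaries and paths follow the OS instead: on Linux, PS is not ps,
# and ./README.md and ./readme.md can be two different files.
```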

Documentation: The Path of Least Resistance

Ever tried to write a formatted man page? It’s painful:

.PP

The \fB\fCcontainers.conf\fR file should be placed under \fB\fC$HOME/.config/containers/containers.conf\fR on Linux and Mac and \fB\fC%APPDATA%\\containers\\containers.conf\fR on Windows.

.PP

\fBpodman [GLOBAL OPTIONS]\fP

.SH GLOBAL OPTIONS

.SS \fB--connection\fP=\fIname\fP, \fB-c\fP

.PP

Remote connection name

.SS \fB--help\fP, \fB-h\fP

.PP

Print usage statement

This is a small excerpt from a portion of the podman manual page. Note the syntax complexity and ambiguity.

By contrast, you can document your PowerShell functions with plain-text comments right inside the same file:

#!/usr/bin/env pwsh

# /home/myuser/.config/powershell/profile.ps1

<#

.SYNOPSIS

A short one-liner describing your function

.DESCRIPTION

You can write a longer description (any length) for display when the user asks for extended help documentation.

Give all the overview data you like here.

.NOTES

Miscellaneous notes section for tips, tricks, caveats, warnings, one-offs...

.EXAMPLE

Get-MyIP # Runs the command, no arguments, default settings

.EXAMPLE

Get-MyIP -From ipinfo.io -CURL # Runs `curl ipinfo.io` and gives results

#>

function Get-MyIP { ... }

Given the above example, an end-user could simply type help Get-MyIP in PowerShell and be presented with comprehensive help documentation including examples within their specified $PAGER (e.g. less or my current favorite, moar). You can even just jump straight to the examples if you want, too:

> Get-Help -Examples Get-History

NAME

Get-History

SYNOPSIS

Gets a list of the commands entered during the current session.

[...]

--------- Example 2: Get entries that include a string ---------

Get-History | Where-Object {$_.CommandLine -like "*Service*"}

[...]

I’ve long said that if a developer can’t be bothered to write at least something useful about how to use their product or tool, it ain’t worth much. Usually nothing. Because nobody has time to go spelunking through your code to figure out how to use your tool – if we did, we’d write our own.

That’s why anything that makes documentation easier and more portable is a win in my book, and in this category, PowerShell delivers. The syntax summaries and supported arguments list are even generated dynamically by PowerShell! You don’t have to write that part at all!
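If you want to see that split between “what you write” and “what PowerShell generates” for yourself, here’s a tiny self-contained sketch. Get-Greeting is a throwaway function invented for this example; it has nothing to do with the Get-MyIP stub above.

```powershell
function Get-Greeting {
    <#
    .SYNOPSIS
    Builds a greeting for the given name.
    .EXAMPLE
    Get-Greeting -Name Ada
    #>
    param(
        # Who to greet. (A comment placed here becomes the parameter's help text.)
        [string] $Name = 'world'
    )
    "Hello, $Name!"
}

# The synopsis is read from the comment block inside the function...
(Get-Help Get-Greeting).Synopsis

# ...while the syntax summary is generated from the param() block.
Get-Command Get-Greeting -Syntax
```

Add or rename a parameter and the syntax summary follows along on the next call, with no documentation edits required.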

The One Caveat: Tooling

Most tooling for *nix workflows is stuck pretty hard in sh land. Such tools have been developed, in some cases, over multiple decades, with conventions unintentionally becoming established in a somewhat haphazard manner, and without much (if any) thought toward the portability of those tools to non-UNIX shells.

And let’s face it, that’s 100% Microsoft’s fault. No getting around the fact that they kept PowerShell a Windows-only, closed-source feature for a very long time, and that being the case, why should developers on non-Windows platforms have bothered? Ignoring it was – note the past tense here – entirely justified.

But now that’s all changed. Modern PowerShell isn’t at all Windows-only anymore, and it’s fully open source now, too. It works on Linux, MacOS, and other UNIX-flavored systems (though on those you likely have to compile from source), along with Windows, of course. bash, while ubiquitous on *nix platforms, is wildly inconsistent in which version is deployed or installed, has no built-in update notification ability, and often requires significant manual work to implement a smooth and stable upgrade path. It’s also non-trivial to install on Windows.

PowerShell, by contrast, is available on almost as many platforms (though how well tested it is outside the most popular non-Windows platforms is certainly up for debate), is available to end-users via “click some buttons and you’re done” MSI installers for Windows or PKG installers on MacOS, and is just as easy to install on *nix systems as bash is on Windows machines (if not easier in some cases; e.g. WSL).

Additionally, PowerShell has a ton of utilities available out-of-the-box that bash has to rely on external tooling to provide. This means that any bash script that relies on that external tooling can break if said tooling has implementation differences the script didn’t account for. If this sounds purely academic, consider the curious case of ps on Linux:

$ man ps

[...]

This version of ps accepts several kinds of options:

1 UNIX options, which may be grouped and must be preceded by a dash.

2 BSD options, which may be grouped and must not be used with a dash.

3 GNU long options, which are preceded by two dashes.

Options of different types may be freely mixed, but conflicts can appear.

[...] due to the many standards and ps implementations that this ps is

compatible with.

Note that ps -aux is distinct from ps aux. [...]

Source: ps manual from Fedora Linux 35

By contrast, PowerShell implements its own Get-Process cmdlet (a type of shell function, basically) so that you don’t even need ps or anything like it at all. The internal implementation of how that function works varies by platform, but the end result is the same on every single one. You don’t have to worry about the way it handles arguments snowflaking from Linux to MacOS, because using it is designed to be 100% consistent across all platforms when relying purely on PowerShell’s built-in commands.

And, if you really do need an external tool that is entirely unaware of PowerShell’s existence? No problem: you can absolutely (maybe even easily?) integrate existing tools with PowerShell, if you, or the authors of that tool, so desire.

But, IS there such a desire? Does it presently exist?

Probably not.

Open source developers already work for free, on their own time, to solve very complex problems. They do this on top of their normal “day job,” not instead of it (well, most, anyway).

Shout-out to FOSS contributors: THANK YOU all, so much, for what you do! Without you, millions of jobs and livelihoods would not exist, so have no doubt that your efforts matter!

It’s beyond ridiculous to expect that these unsung heroes would, without even being paid in hugs, let alone real money, add to their already superhuman workload by committing to support a shell they’ve long thought of as “yet another snowflake” with very limited adoption or potential, from a company they’ve likely derided for decades, sometimes rightly so. You can’t blame these folks for saying “nope” to PowerShell, especially given its origin story as a product from a company that famously “refuses to play well with others.”

And therein lies the problem: many sh-flavored tools just don’t have any good PowerShell integrations or analogs (yet). That may change over time as more people become aware of just how awesome modern pwsh can be (why do you think I wrote this article!?). But for the time being, tools that developers like myself have used for years, such as rvm, rbenv, asdf, and so on, just don’t have any officially supported way to be used within PowerShell.

The good news is that this is a solvable problem, and in more ways than one!

Overload Limitations With Your Own PowerShell Profile

The most actionable of these potential solutions is the development of your own pwsh profile code that will sort of fake a given command, within PowerShell only, to allow you to use the same command/workflow you would have in bash or zsh, implemented as a compatibility proxy under the hood within PowerShell.

For a real-world example, here’s a very simplistic implementation of a compatibility layer to enable rbenv and bundle commands (Ruby development) in PowerShell (according to my own personal preferences) by delegating to the real such commands under the hood:

#

# Notes:

# 1. My $env:PATH has already been modified to find rbenv in this example

# 2. See `help about_Splatting`, or the following article (same thing), to understand @Args

# https://docs.microsoft.com/en-us/powershell/module/microsoft.powershell.core/about/about_splatting?view=powershell-7.2

# Oversimplification: @Args = "grab whatever got passed to this thing, then throw 'em at this other thing VERBATIM"

#

function Invoke-rbenv {

rbenv exec @Args

}

function irb {

Invoke-rbenv irb @Args

}

function gem {

Invoke-rbenv gem @Args

}

function ruby {

Invoke-rbenv ruby @Args

}

function bundle {

Invoke-rbenv bundle @Args

}

function be {

Invoke-rbenv bundle exec @Args

}

With this in place, I can type out commands like be puma while working on a Rails app, and have that delegated to rbenv’s managed version of bundler, which then execs that command for me. And it’s all entirely transparent to me!

This is just one example and an admittedly simplistic one at that. Nonetheless, it proves that using PowerShell as your daily driver is not only possible but feasible, even when you need to integrate with other tools that are entirely unaware of PowerShell’s existence.

But, we can go a step further with the recently-released PowerShell Crescendo. While I have yet to look into this all that much, essentially it provides a way for standard *nix tools to have their output automatically transformed from basic strings into real PowerShell objects at runtime. You have to write some parsing directives to tell PowerShell how to interpret the strings generated by some program, but once that’s done you’re set: you’ll have non-PowerShell tools generating real PowerShell objects without any change to the tools themselves at all.
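To be clear about what that transformation means, here’s a hand-rolled sketch of the underlying idea. This is NOT Crescendo’s configuration format or API (I haven’t dug into it enough to show that); it’s plain PowerShell turning a tool’s text output into objects. The sample text is invented and stands in for whatever your native tool prints.

```powershell
# Made-up text standing in for a native tool's columnar output.
$raw = @'
NAME   STATUS   RESTARTS
web    Running  0
db     Running  2
cache  Stopped  5
'@

# Skip the header row, split each line on runs of whitespace, emit typed objects.
$rows = $raw -split '\r?\n' | Select-Object -Skip 1 | ForEach-Object {
    $name, $status, $restarts = -split $_
    [pscustomobject]@{
        Name     = $name
        Status   = $status
        Restarts = [int] $restarts
    }
}

# From here on it's ordinary object filtering; no more string slicing.
$rows | Where-Object { $_.Restarts -gt 1 } | Sort-Object Restarts -Descending
```

The fragile string handling still exists, but it lives in exactly one place, and everything downstream works with properties.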

Jump on the Voltswagon!

If you’re not convinced by now, something’s wrong with you.

For the rest of you out there, you’ve got some options for installation:

- Use the packages provided by Microsoft (deb, rpm) (sudo required)

- Grab a precompiled Linux tarball then unpack it somewhere and run: /path/to/powershell/7.2/pwsh (no sudo required)

- Mac users can brew install powershell --cask. (sudo required for .pkg installer)

Antipattern: Do NOT change your default login shell

Don’t do this:

chsh -s $(which pwsh)

Modify your terminal emulator profile instead.

Just a quick tip: while PowerShell works fine as a default login shell and you can certainly use it this way, other software may break if you do this because it may assume your default login shell is always bash-like and not bother to check. This could cause some minor breakage here and there.

But the real reason I advise against this is more to protect yourself from yourself. If you shoot yourself in the foot with your pwsh configuration and totally bork something, you won’t have to worry too much about getting back to a working bash or zsh configuration so you can get work done again, especially if you’re in an emergency support role or environment.

When you’re first learning, fixing things isn’t always a quick or easy process, and sometimes you just don’t have time to fiddle with all that, so it’s good to have a “backup environment” available just in case you have to act fast to save the day.

Don’t interpret this as “PowerShell is easy to shoot yourself in the foot with” – far from it. Its remarkable level of clarity and consistency make it very unlikely that you’ll do this, but it’s still possible. And rather than just nuking your entire PowerShell config directory and starting from scratch, it’s far better to pick it apart and make yourself fix it, because you learn the most when you force yourself through the hard problems. But you won’t always have time to do that, especially during your day job, so having a fallback option is always a good idea.

First Steps

Once installed, I recommend you create a new profile specifically for PowerShell in your terminal emulator of choice, then make that the default profile (don’t remove or change the existing one if you can help it; again, have a fallback position just in case you screw things up and don’t have time to fix it).

Specifically, you want your terminal emulator to run the program pwsh, located wherever you unpacked your tarball. If you installed it via the package manager, it should already be in your system’s default $PATH so you probably won’t need to specify the location (just pwsh is fine in that case). No arguments necessary.

With that done, run these commands first:

PS > Update-Help

PS > help about_Telemetry

The first will download help documentation from the internet so you can view help files in the terminal instead of having to go to a browser and get a bunch of outdated, irrelevant results from Google (I recommend feeding The Duck instead).

The second will tell you how to disable telemetry from being sent to Microsoft. It’s not a crucial thing, and I don’t think Microsoft is doing anything shady here at all, but I always advise disabling telemetry in every product you can, every time you can, everywhere you can, just as a default rule.

More importantly, however, this will introduce you to the help about_* documents, which are longer-form help docs that explain a series of related topics, instead of just one command. Seeing a list of what’s available is nice and easy: just type help about_ then mash the TAB key a few times. It’ll ask if you want to display all hundred-some-odd options; say Y. Find something that sounds interesting, then enter the entire article name, e.g. help about_Profiles or help about_Help, for example.

Next, check out my other article on this blog about customizing your PowerShell prompt!

Roll the Dice

bash and zsh are great tools: they’re wicked fast, incredibly stable, and have decades of battle-tested, hard-won “tribal knowledge” built around them that’s readily available via your favorite search engine.

But they’re also antiquated. They’re based on a simpler set of ideas that were right for their time, but are fundamentally primitive compared to the considerations PowerShell was designed around.

Sooner or later you just have to admit that something more capable exists, and that’s when you get to make a choice: stick with what you know, safe in your comfort zone, or roll the dice on something that could potentially revolutionize your daily workflow.

Once I understood just a fraction of the value provided by pwsh, that choice became a no-brainer for me. It’s been roughly six months since I switched full-time, and while I still occasionally have a few frustrations here and there, those cases are very few and far between (it’s been at least two months since the last time something made me scratch my head and wonder).

But those frustrations are all part of the learning process. I see even more “WTF?” things with bash or zsh than I do with pwsh, by far! Those things are rarely easy to work out, and I struggle with outdated documentation from search results in nearly every case!

But with PowerShell, figuring out how to work around the problem – if indeed it is a problem, and not my own ignorance – is much easier because I’m not dealing with an arcane, arbitrary syntax from hell. Instead, I have a predictable, standardized, consistent set of commands and utilities available to me that are mostly self-documenting and available offline (not some archived forum post from 2006). On top of that, I have real classes and objects available to me, and a built-in debugger (with breakpoints!) that I can use to dig in and figure things out!

So, why are we still using system shells that are based on paradigms from the 1980s? Are you still rocking a mullet and a slap bracelet, too?

Just because “that’s the way it’s always been” DOESN’T mean that’s the way it’s always gotta be.

PowerShell is the first real innovation I’ve seen in our field in a long time. Generally replete with “social” networks, surveillance profiteering, user-generated “content” and any excuse to coerce people into subscriptions, our industry repackages decades-old innovations ad infinitum, even when new approaches are within reach, desperately needed, and certain to be profitable.

So in the rare case that something original that is actually useful, widely-available and open source finally does see the light of day, I get very intrigued. I get excited. And in this case, I “jumped on the Voltswagon!”

And you should, too!

The author would like to thank Chris Weibel for his help with some of those electricity puns, and Norm Abramovitz for his editorial assistance in refining this article.