Welcome to part two of our QUAKE-Speedrun. This time we will deploy Argo and use it to automate the deployment of additional components on our Kubernetes cluster, so we can start building out our platform.

First, let's take a look at the modules of the Argo Project to understand what each of them does.

Argo Project:

ArgoCD

“Declarative Continuous Delivery for Kubernetes”

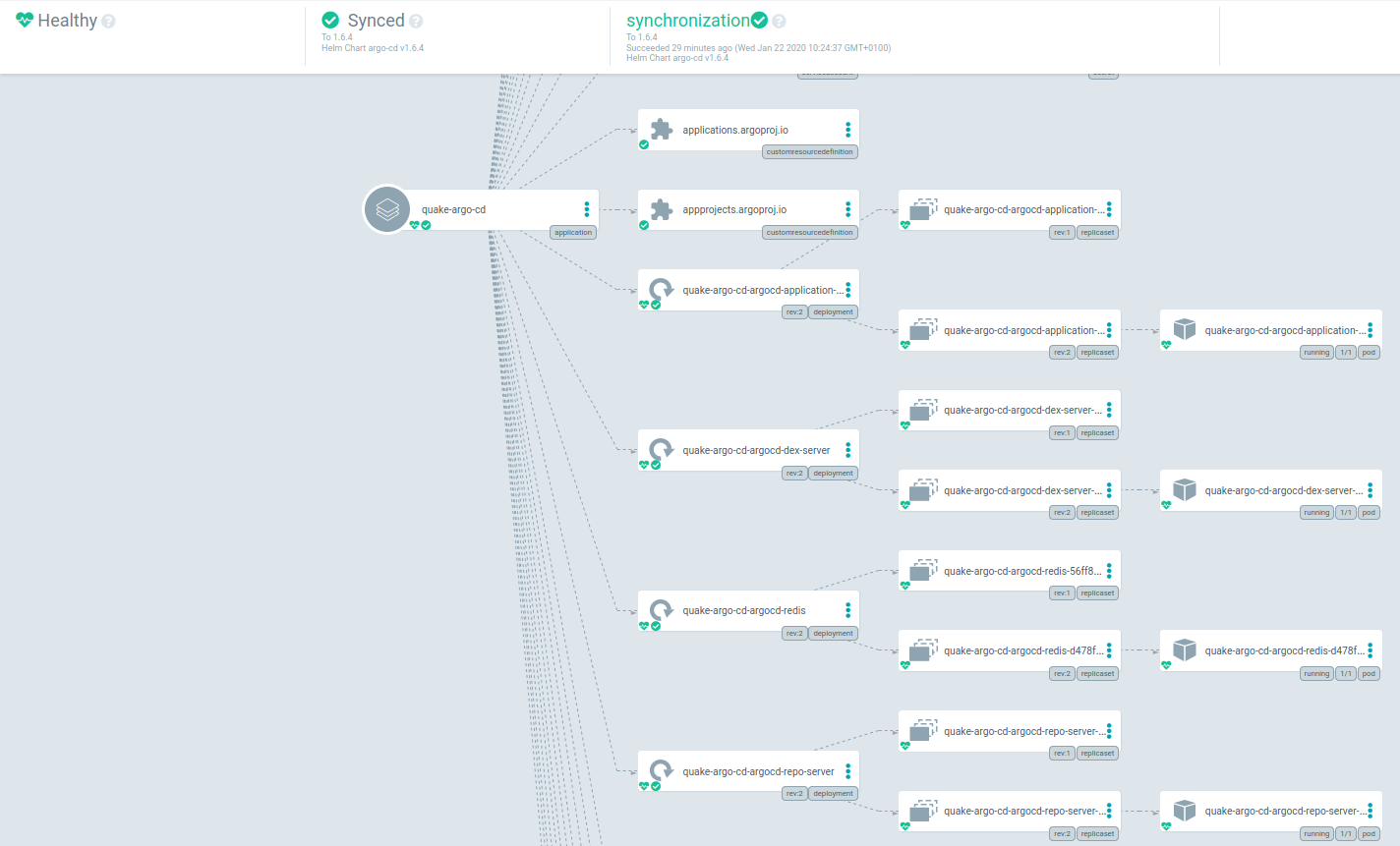

ArgoCD deploys Helm charts, plain Kubernetes manifests, Ksonnet and Kustomize applications through Argo's Application custom resource. It can also pull your Applications' YAML from a Git repository and comes with an amazing UI capable of dependency lookup (e.g. Deployment > ReplicaSet/StatefulSet/… > Pods), with log and Kubernetes event streaming included.
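To make the Application resource a bit more concrete, here is a minimal sketch of what an Application for a Helm chart can look like, wrapped in a small shell function so it can be rendered and piped to kubectl. The function name `render_helm_application` and its parameters are hypothetical, and this is not the `helm-app-template.yml` used by QUAKE. It assumes Argo CD runs in the same namespace the chart is deployed to and uses Argo CD's built-in `default` project.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print an Argo CD Application that installs a Helm chart
# from a Helm repository. Sketch only, not QUAKE's helm-app-template.yml.
# Assumes Argo CD itself runs in the same namespace as the deployed chart.
render_helm_application() {
  local name="$1" namespace="$2" repo_url="$3" chart="$4" version="$5"
  cat <<EOF
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: ${name}
  namespace: ${namespace}
spec:
  project: default
  source:
    repoURL: ${repo_url}
    chart: ${chart}
    targetRevision: ${version}
  destination:
    server: https://kubernetes.default.svc
    namespace: ${namespace}
EOF
}
```

Piping the output into `kubectl apply -f -` would register the Application; Argo CD then renders the chart at `targetRevision` from `repoURL` itself and compares it against what is running in the cluster.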

Argo-Rollouts

“Argo Rollouts introduces a new custom resource called a Rollout to provide additional deployment strategies such as Blue Green and Canary to Kubernetes. The Rollout custom resource provides feature parity with the deployment resource with additional deployment strategies. Check out the Deployment Concepts for more information on the various deployment strategies.”

Let's just say we want those things; who doesn't like to be in control? Jokes aside, depending on your workloads, native Blue-Green and Canary strategies are really useful for zero-downtime deployments.
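As an illustration of the canary strategy, the following sketch renders a minimal Rollout. The function `render_canary_rollout`, the `app` label, the replica count and the step weights are illustrative assumptions. Without a traffic router (service mesh or ingress), Argo Rollouts approximates `setWeight` by scaling the number of canary pods.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a minimal Argo Rollout using the canary strategy.
# Labels, replica count and step weights are illustrative assumptions.
render_canary_rollout() {
  local name="$1" image="$2"
  cat <<EOF
apiVersion: argoproj.io/v1alpha1
kind: Rollout
metadata:
  name: ${name}
spec:
  replicas: 5
  selector:
    matchLabels:
      app: ${name}
  template:
    metadata:
      labels:
        app: ${name}
    spec:
      containers:
      - name: ${name}
        image: ${image}
  strategy:
    canary:
      steps:
      - setWeight: 20          # run ~20% of the replicas on the new version
      - pause: {}              # wait until the rollout is promoted manually
      - setWeight: 60
      - pause: {duration: 60}  # wait 60 seconds before finishing the rollout
EOF
}
```

Applying the Rollout once and then applying it again with a newer image tag starts the canary steps; the rollout stops at the empty `pause` until it is promoted, for example with `kubectl argo rollouts promote <name>` from the Argo Rollouts kubectl plugin.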

Argo-Workflows

“Argo Workflows is an open source container-native workflow engine for orchestrating parallel jobs on Kubernetes. Argo Workflows is implemented as a Kubernetes CRD (Custom Resource Definition).”

If you're familiar with Concourse, think of jobs/tasks; in Jenkins terms, it's stages/steps, with input/output artifacts included. Essentially, it's a way of defining a chain of containers that run in sequence and can pass build artifacts on to each other.
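To make the "chain of containers passing artifacts" idea concrete, here is a sketch of a two-step Workflow, again wrapped in a shell function. The function name, template names and images are illustrative, and passing artifacts between steps assumes an artifact repository (such as S3 or MinIO) has been configured for the workflow controller.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a two-step Argo Workflow in which the "test" step
# consumes a file produced by the "build" step.
# Assumes an artifact repository is configured for the workflow controller.
render_artifact_workflow() {
  cat <<'EOF'
apiVersion: argoproj.io/v1alpha1
kind: Workflow
metadata:
  generateName: artifact-passing-
spec:
  entrypoint: build-and-test
  templates:
  - name: build-and-test
    steps:               # outer list items run one after another
    - - name: build
        template: build
    - - name: test
        template: test
        arguments:
          artifacts:
          - name: binary
            from: "{{steps.build.outputs.artifacts.binary}}"
  - name: build
    container:
      image: alpine:3.12
      command: [sh, -c]
      args: ["echo 'pretend this is a build result' > /tmp/app.bin"]
    outputs:
      artifacts:
      - name: binary
        path: /tmp/app.bin
  - name: test
    inputs:
      artifacts:
      - name: binary
        path: /tmp/app.bin
    container:
      image: alpine:3.12
      command: [sh, -c]
      args: ["cat /tmp/app.bin"]
EOF
}
```

Because the manifest uses `generateName`, it has to be submitted with `kubectl create -f -` (or saved to a file and passed to `argo submit`) rather than `kubectl apply`, which requires a fixed name.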

Argo-Events

“Argo Events is an event-based dependency manager for Kubernetes which helps you define multiple dependencies from a variety of event sources like webhook, s3, schedules, streams etc. and trigger Kubernetes objects after successful event dependencies resolution.”

Let’s deploy Argo

Now that we know what it is supposed to do, let’s get it working.

```shell
quake --loadout
```

```shell
# Create the namespace for the platform components (errors such as "already exists" are discarded)
kubectl create namespace quake-system &> /dev/null

# Register every Helm repository listed in helm-sources.yml
local INDEX=0
while true; do
  REPO_URL=$(yq r ${REPO_ROOT}/helm-templates/helm-sources.yml "QUAKE_HELM_SOURCES.${INDEX}.url")
  REPO_NAME=$(yq r ${REPO_ROOT}/helm-templates/helm-sources.yml "QUAKE_HELM_SOURCES.${INDEX}.name")
  if [[ "${REPO_NAME}" == "null" ]]; then
    break
  fi
  helm repo add "${REPO_NAME}" "${REPO_URL}"
  ((INDEX+=1))
done

helm repo update

# Render the values for every chart listed in helm-sources.yml and install/upgrade it
local INDEX=0
while true; do
  local INSTALL_NAME=$(yq r ${REPO_ROOT}/helm-templates/helm-sources.yml "QUAKE_HELM_INSTALL_CHARTS.${INDEX}.name")
  local CHART_VERSION=$(yq r ${REPO_ROOT}/helm-templates/helm-sources.yml "QUAKE_HELM_INSTALL_CHARTS.${INDEX}.version")
  if [[ "${INSTALL_NAME}" == "null" ]]; then
    break
  fi
  # "repo/chart" -> "chart"
  local CHART_NAME=$(echo ${INSTALL_NAME} | sed 's#.*/##')
  local HELM_STATE=${REPO_ROOT}/state/helm/${QUAKE_CLUSTER_NAME}.${QUAKE_TLD}
  mkdir -p ${HELM_STATE} &> /dev/null

  # Interpolate the values template with the cluster vars and keep this chart's section
  yq r \
    <( kops toolbox template \
         --template helm-templates/helm-values-template.yml \
         --values ${REPO_ROOT}/state/kops/vars-${QUAKE_CLUSTER_NAME}.${QUAKE_TLD}.yml \
     ) \
    ${CHART_NAME} > ${HELM_STATE}/${CHART_NAME}-values.yml

  helm upgrade --wait --install "quake-${CHART_NAME}" "${INSTALL_NAME}" \
    --values ${HELM_STATE}/${CHART_NAME}-values.yml \
    --version ${CHART_VERSION} \
    --namespace quake-system
  ((INDEX+=1))
done
```

First, we parse our helm-sources.yml, which lists the charts, their desired versions, and the Helm repositories hosting them, and add each of those repositories to Helm.

Then, we interpolate the helm-values-template with the data Terraform output and create a values.yml containing the merged configuration for each chart we're going to deploy.

Finally, we use `helm upgrade --wait --install` as an idempotent way of deploying or upgrading each chart with the freshly templated values.
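The template step later reads the rendered values from `state/helm/<cluster>.<tld>/<chart>-values.yml`, so the loadout step has to write them under the same chart name. The sketch below spells that convention out with bash parameter expansion, which behaves like the `sed` calls in the scripts. The helper names and the example values for `REPO_ROOT`, `QUAKE_CLUSTER_NAME` and `QUAKE_TLD` are made up for illustration.

```shell
#!/usr/bin/env bash
# Hypothetical helpers spelling out the naming convention for chart entries
# of the form "repo/chart" and their rendered values files.
chart_name()      { local chart="$1"; echo "${chart##*/}"; }  # like sed 's#.*/##'
chart_repo_name() { local chart="$1"; echo "${chart%%/*}"; }  # like sed 's#/.*##'

values_file() {
  local chart="$1"
  echo "${REPO_ROOT}/state/helm/${QUAKE_CLUSTER_NAME}.${QUAKE_TLD}/$(chart_name "${chart}")-values.yml"
}

# Example with made-up values:
REPO_ROOT=/tmp/quake QUAKE_CLUSTER_NAME=demo QUAKE_TLD=example.com
values_file argo/argo-cd  # prints /tmp/quake/state/helm/demo.example.com/argo-cd-values.yml
```

Greedy `##*/` strips everything up to the last slash and `%%/*` strips everything from the first slash, matching the two `sed` expressions without spawning extra processes.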

You can now inspect your quake-system namespace by running:

```shell
kubectl get all -n quake-system
```

```
NAME                                                 TYPE           CLUSTER-IP       EXTERNAL-IP            PORT(S)                      AGE
service/quake-argo-cd-argocd-application-controller  ClusterIP      100.68.155.246   <none>                 8082/TCP                     24h
service/quake-argo-cd-argocd-dex-server              ClusterIP      100.65.68.36     <none>                 5556/TCP,5557/TCP            24h
service/quake-argo-cd-argocd-redis                   ClusterIP      100.69.164.236   <none>                 6379/TCP                     24h
service/quake-argo-cd-argocd-repo-server             ClusterIP      100.68.32.95     <none>                 8081/TCP                     24h
service/quake-argo-cd-argocd-server                  LoadBalancer   100.68.217.5     ...elb.amazonaws.com   80:32606/TCP,443:30637/TCP   24h
service/quake-argo-ui                                ClusterIP      100.66.143.38    <none>                 80/TCP                       24h
service/quake-external-dns                           ClusterIP      100.67.57.61     <none>                 7979/TCP                     24h

NAME                                                         READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/quake-argo-cd-argocd-application-controller  1/1     1            1           24h
deployment.apps/quake-argo-cd-argocd-dex-server              1/1     1            1           24h
deployment.apps/quake-argo-cd-argocd-redis                   1/1     1            1           24h
deployment.apps/quake-argo-cd-argocd-repo-server             1/1     1            1           24h
deployment.apps/quake-argo-cd-argocd-server                  1/1     1            1           24h
deployment.apps/quake-argo-events-gateway-controller         1/1     1            1           24h
deployment.apps/quake-argo-events-sensor-controller          1/1     1            1           24h
deployment.apps/quake-argo-ui                                1/1     1            1           24h
deployment.apps/quake-argo-workflow-controller               1/1     1            1           24h
deployment.apps/quake-aws-alb-ingress-controller             1/1     1            1           24h
deployment.apps/quake-external-dns                           1/1     1            1           24h

NAME                                                                    DESIRED   CURRENT   READY   AGE
replicaset.apps/quake-argo-cd-argocd-application-controller-6985bf866d  0         0         0       24h
replicaset.apps/quake-argo-cd-argocd-application-controller-85bfd47868  1         1         1       23h
replicaset.apps/quake-argo-cd-argocd-dex-server-6d77949f5d              1         1         1       23h
replicaset.apps/quake-argo-cd-argocd-dex-server-6f969b7bb               0         0         0       24h
replicaset.apps/quake-argo-cd-argocd-redis-5548685fbb                   1         1         1       23h
replicaset.apps/quake-argo-cd-argocd-redis-db7bdbf86                    0         0         0       24h
replicaset.apps/quake-argo-cd-argocd-repo-server-67bbfb4f5f             1         1         1       23h
replicaset.apps/quake-argo-cd-argocd-repo-server-bb4b8bf79              0         0         0       24h
replicaset.apps/quake-argo-cd-argocd-server-55c6798674                  0         0         0       24h
replicaset.apps/quake-argo-cd-argocd-server-576d6ff898                  1         1         1       23h
replicaset.apps/quake-argo-events-gateway-controller-65846fc954         0         0         0       24h
replicaset.apps/quake-argo-events-gateway-controller-776fff4c48         1         1         1       23h
replicaset.apps/quake-argo-events-sensor-controller-7954475947          0         0         0       24h
replicaset.apps/quake-argo-events-sensor-controller-84879ffb4f          1         1         1       23h
replicaset.apps/quake-argo-ui-6798554bd                                 1         1         1       24h
replicaset.apps/quake-argo-workflow-controller-6bdbb96f86               1         1         1       24h
replicaset.apps/quake-aws-alb-ingress-controller-6768b6c6bd             0         0         0       24h
replicaset.apps/quake-aws-alb-ingress-controller-78474fbdfd             1         1         1       23h
replicaset.apps/quake-external-dns-7c8cc75876                           0         0         0       24h
replicaset.apps/quake-external-dns-85cbdcf957                           1         1         1       23h
```

Now that we have Argo deployed, let's register our newly deployed components as Argo Applications by running:

```shell
quake --template
```

```shell
local CHARTINDEX=0
while CHART=$(yq r ${REPO_ROOT}/helm-templates/helm-sources.yml QUAKE_HELM_INSTALL_CHARTS.$CHARTINDEX.name); do
  if [[ "${CHART}" == "null" ]]; then
    break
  fi
  # Split "repo/chart" into the chart name and the repository name
  CHART_NAME=$(echo "${CHART}" | sed 's#.*/##')
  CHART_REPO_NAME=$(echo "${CHART}" | sed 's#/.*##')

  # Find the index of the chart's repository in QUAKE_HELM_SOURCES
  REPO_INDEX=0
  until [[ "$(yq r ${REPO_ROOT}/helm-templates/helm-sources.yml QUAKE_HELM_SOURCES.${REPO_INDEX}.name)" == "${CHART_REPO_NAME}" ]]; do
    ((REPO_INDEX+=1))
  done

  mkdir -p ${REPO_ROOT}/state/argo/${QUAKE_CLUSTER_NAME}.${QUAKE_TLD}

  # Render the Argo Application manifest for this chart
  kops toolbox template \
    --fail-on-missing=false \
    --template ${REPO_ROOT}/argo-apps/helm-app-template.yml \
    --set APP_NAME="quake-${CHART_NAME}" \
    --set APP_NAMESPACE="quake-system" \
    --set APP_HELM_REPO_URL="$( yq r ${REPO_ROOT}/helm-templates/helm-sources.yml QUAKE_HELM_SOURCES.${REPO_INDEX}.url)" \
    --set APP_HELM_CHART_VERSION="$( yq r ${REPO_ROOT}/helm-templates/helm-sources.yml QUAKE_HELM_INSTALL_CHARTS.${CHARTINDEX}.version )" \
    --set APP_HELM_CHART_NAME="${CHART_NAME}" \
    --set APP_VALUES_INLINE_YAML="$( cat ${REPO_ROOT}/state/helm/${QUAKE_CLUSTER_NAME}.${QUAKE_TLD}/${CHART_NAME}-values.yml)" \
    > ${REPO_ROOT}/state/argo/${QUAKE_CLUSTER_NAME}.${QUAKE_TLD}/${CHART_NAME}-application.yml

  # Register the Application with Argo
  kubectl apply -f ${REPO_ROOT}/state/argo/${QUAKE_CLUSTER_NAME}.${QUAKE_TLD}/${CHART_NAME}-application.yml -n quake-system
  ((CHARTINDEX+=1))
done
```

This renders an Application manifest for each chart and registers the Helm releases we just deployed with Argo, making it aware of the Kubernetes resources it is now tasked with managing.

You can find your Application manifests under `state/argo/${QUAKE_CLUSTER_NAME}.${QUAKE_TLD}`.

Finally, the script uses `kubectl apply`, because Argo Applications are custom resources (defined by a CustomResourceDefinition) and can therefore be managed with kubectl like any other Kubernetes object.
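The `until` loop in the template step assumes that every chart's repository is listed in `QUAKE_HELM_SOURCES`; if one is missing, the lookup never matches and the loop never ends. A bounded lookup avoids that. In this sketch, the arrays `REPO_NAMES`/`REPO_URLS` and the function `repo_url_for` are hypothetical stand-ins for the `yq` calls, and `https://charts.example.com` is a placeholder.

```shell
#!/usr/bin/env bash
# Hypothetical stand-ins for the QUAKE_HELM_SOURCES entries read via yq.
REPO_NAMES=(argo example)
REPO_URLS=(https://argoproj.github.io/argo-helm https://charts.example.com)

# Print the URL of the repository named $1, or fail instead of looping forever.
repo_url_for() {
  local wanted="$1" i
  for i in "${!REPO_NAMES[@]}"; do
    if [[ "${REPO_NAMES[$i]}" == "${wanted}" ]]; then
      echo "${REPO_URLS[$i]}"
      return 0
    fi
  done
  echo "no Helm repository named '${wanted}' in helm-sources.yml" >&2
  return 1
}
```

Inside the template loop, something like `APP_HELM_REPO_URL=$(repo_url_for "${CHART_REPO_NAME}") || return 1` could then replace the unbounded search and surface a typo in helm-sources.yml as an error.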

It may take a while for the DNS record of the Argo LoadBalancer to propagate, but you should usually be able to reach your Argo instance at argo.${QUAKE_CLUSTER_NAME}.${QUAKE_TLD} after around 15 minutes. If no password is specified, the default admin password for Argo CD is the name of the argocd-server pod. We did, however, generate and set a password, which you can read from the Terraform outputs:

```shell
cd ${REPO_ROOT}/terraform
terraform output QUAKE_ARGO_PASSWORD
```

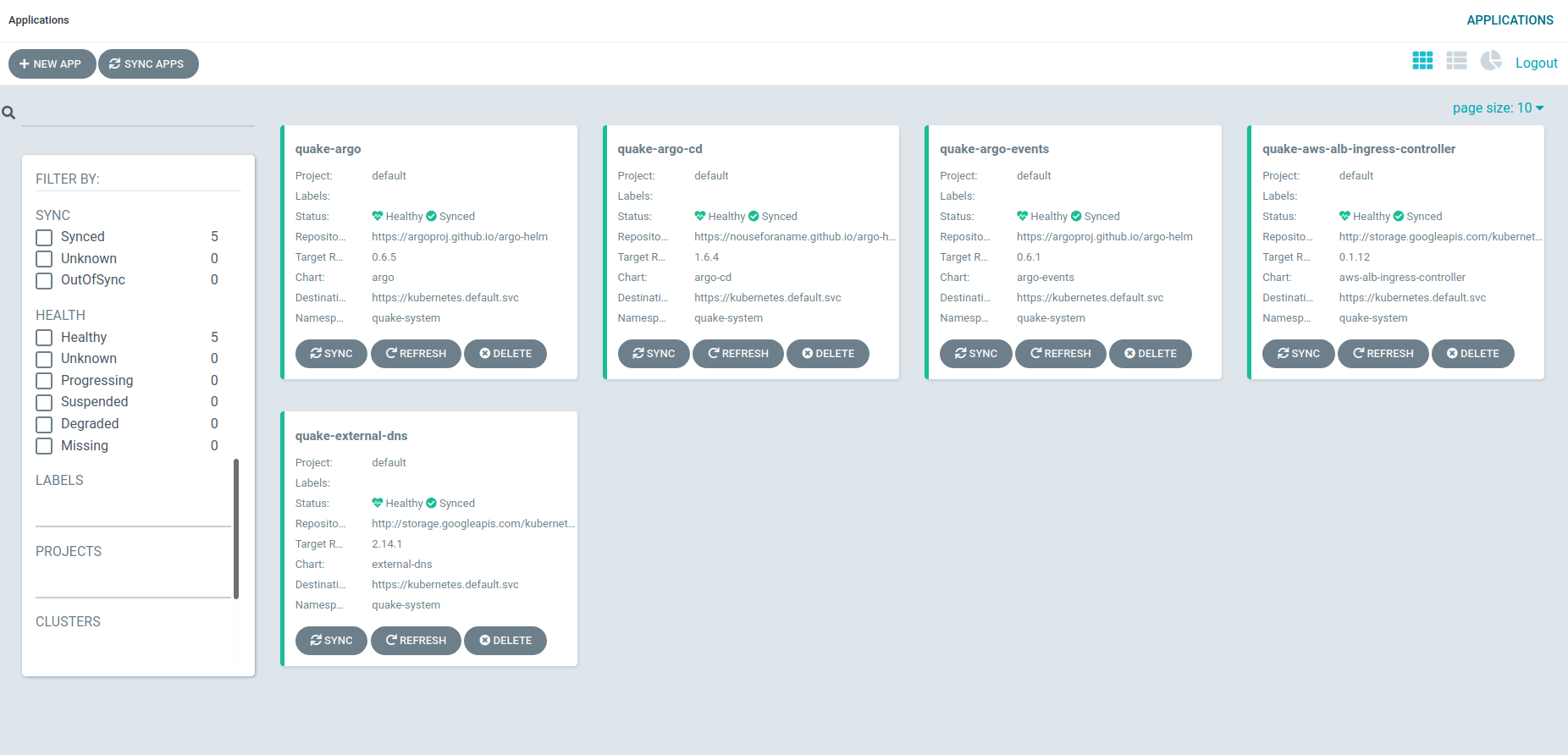

To finish this post off, let's log in and look at what we deployed.

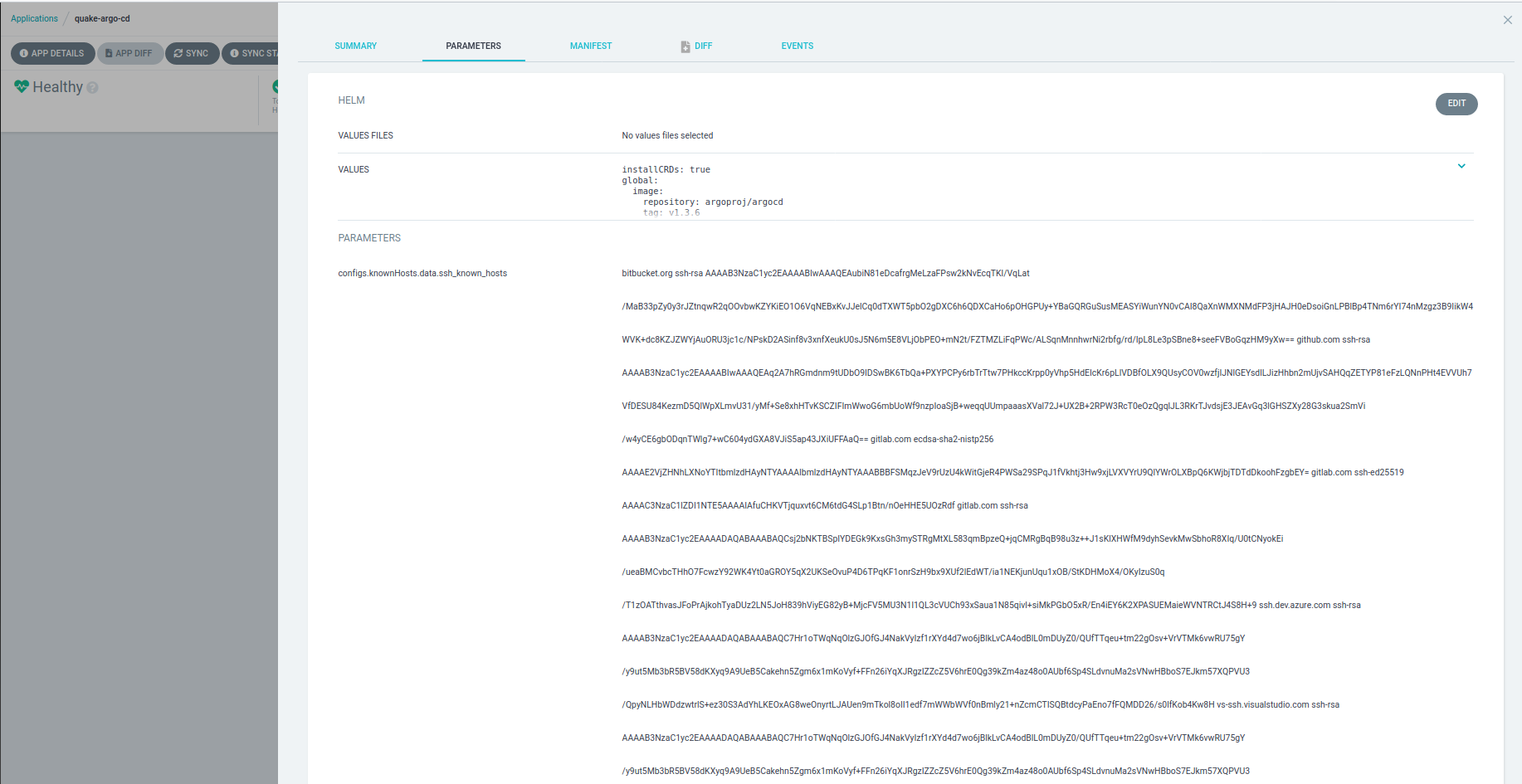

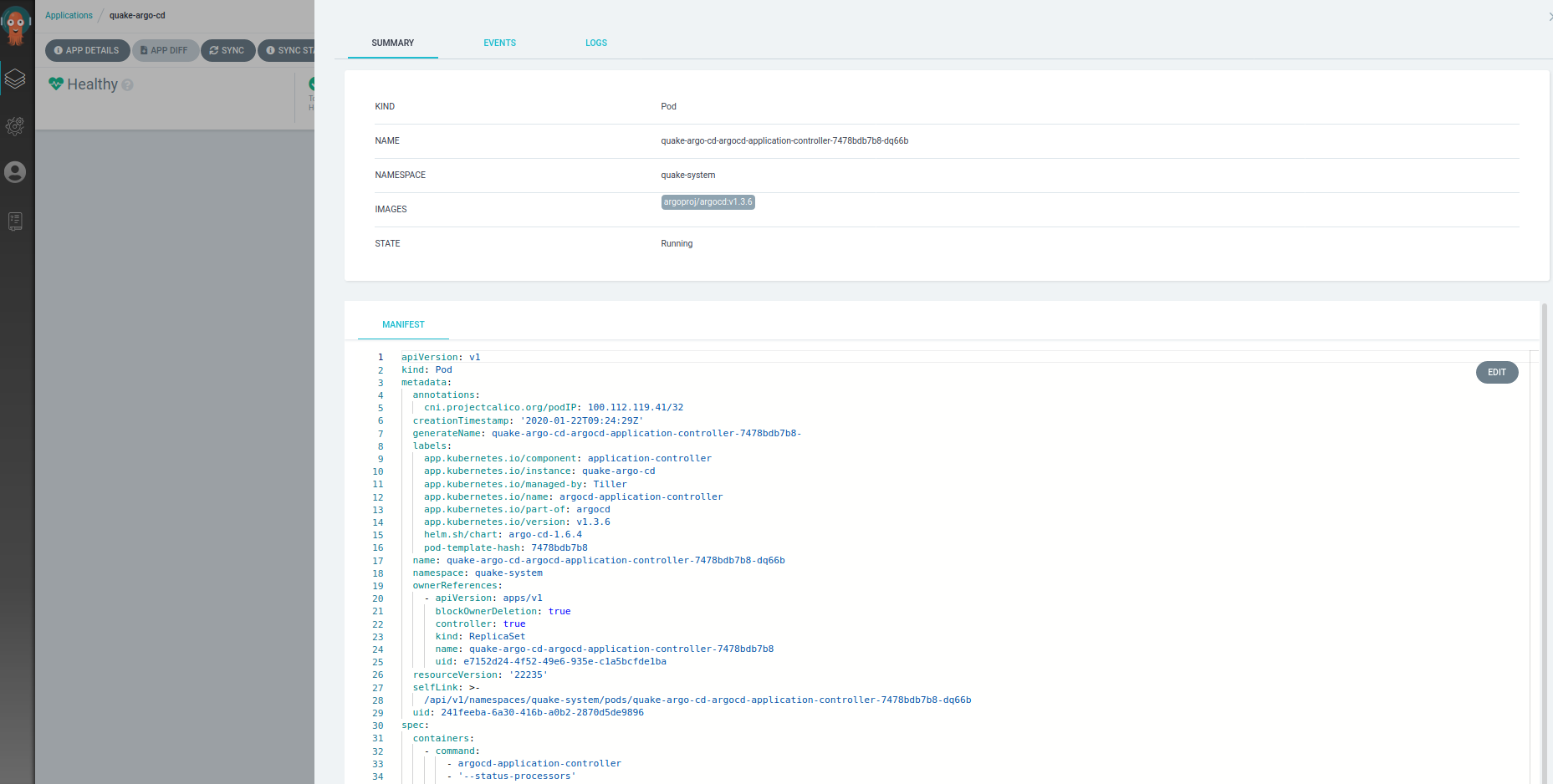

As you can see, Argo doubles as a quite capable Kubernetes UI that gives you most of the information you need in day-to-day work. You can test and change values for your Helm deployments (and other apps/resources Argo watches) directly from the UI, watch your deployments propagate, stream your pod logs, or check the events on your resources.

If you want to dig deeper into Argo on your own, check out their App Examples; you now have everything in place to play around.

That’s it for today.

If you're interested in learning more, check the first recap post for more on ArgoCD and some Rollout experiments. An additional recap post covering Events and Rollouts is currently being written and will be released soon, so make sure to check our blog regularly; you'll find many interesting adventures in the cloud space.

As always, thank you for reading, and let me know your thoughts in the comments.

If you find an issue, please open one on GitHub.