This is the third part of a multi-part series on designing and building non-trivial containerized solutions. We’re making a radio station using off-the-shelf components and some home-spun software, all on top of Docker, Docker Compose, and eventually, Kubernetes.

In this part, we’re going to take the notes we made during the last part (you did take notes, didn’t you?) and distill them into some infrastructure-as-code. It all starts with Docker Compose.

Welcome back! If you’ve been following along, you should now have a directory of .opus audio files and two containers streaming audio out to anyone who connects. If you haven’t been following along, go read the other parts first. Then come back.

The Dockerfiles we have made so far are eminently portable. Anyone can take those little recipes and rebuild our container images, more or less. The images themselves are also portable: we could docker push them up to Docker Hub and let the world enjoy their container-y goodness.

What’s not so portable is how we run and wire those containers up. That part is actually quite ad hoc, dense, and easily forgotten. Let’s rectify that. Let’s use Docker Compose.

Docker Compose is a small-ish orchestration system for a single Docker host. It lets us specify how we want our containers run: what ports to forward, what volumes to mount, etc. It also has a few tricks up its sleeve that will make our lives better. Best of all, Docker Compose recipes can be shared with others who also like building bespoke Internet radio stations. We are legion. And we use Docker.

What’s in a recipe? It starts, as most things in the container world do, with a YAML file:

```shell
$ cat docker-compose.yml
---
version: '3'
```

We’ll be using version 3 of the Compose file format. That’s not terribly important right now, but certain features won’t work if we’re not on a new enough version.

NOTE: If you have been following along, you’ve probably got containers still running from our previous experiments. Luckily, we specified the --rm flag when we ran these containers, so all we need to do to clean up is stop them:

```shell
$ docker stop icecast
$ docker stop liquid
```

Docker Compose deals in containers, but it does so in scalable sets of identical containers that it calls “services”. For our immediate purposes, we won’t be scaling these things out, so a service is effectively a container, and vice versa.

We’ll start by adding our first service / container: Icecast2 itself.

```yaml
---
version: '3'

services:
  icecast:
    image: filefrog/icecast2:latest
    ports:
      - '8000:8000'
    environment:
      ICECAST2_PASSWORD: whatever-you-want-it-to-be
```

Essentially, we’re taking the docker run command and committing it to a file, in YAMLese. To get this container running, we’ll use the docker-compose command:

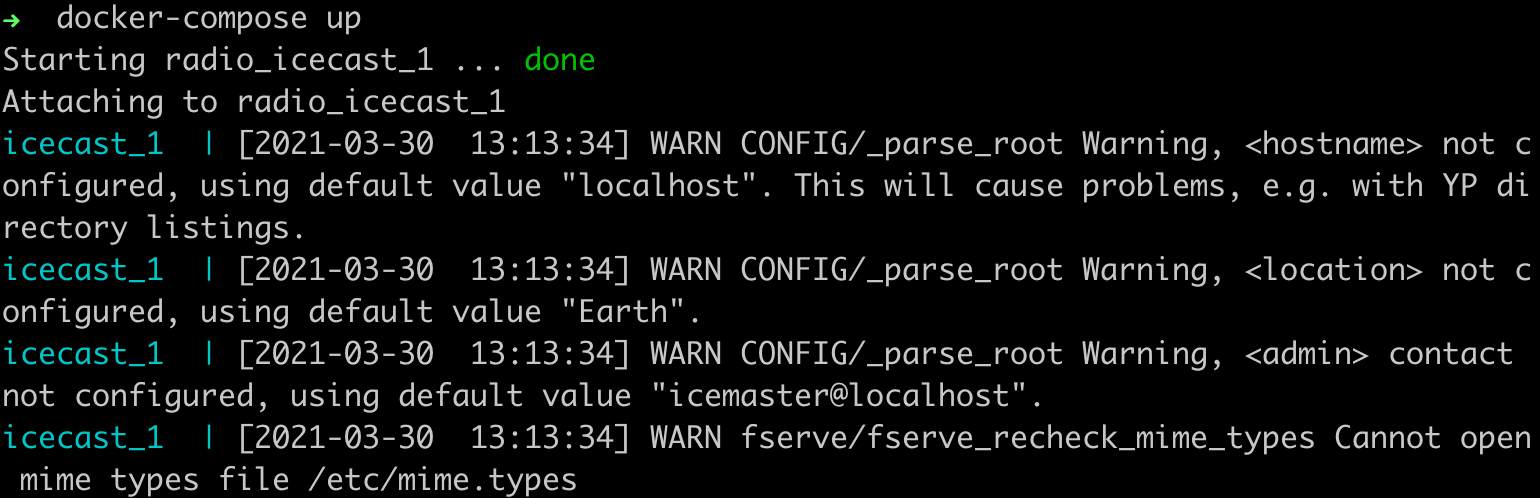

```shell
$ docker-compose up
```

This starts the containers up in “foreground mode”: their output will be multiplexed onto your terminal. As we add more containers (err… services), Docker Compose will start color-coding their output to help us keep them separate.

To get your shell prompt back, terminate the foreground process with a Ctrl-C.

That was so easy that we might as well add our LiquidSoap service / container into the mix:

```yaml
---
version: '3'

services:
  icecast:
    image: filefrog/icecast2:latest
    ports:
      - '8000:8000'
    environment:
      ICECAST2_PASSWORD: whatever-you-want-it-to-be

  source:
    image: filefrog/liquidsoap:latest
    command:
      - |
        output.icecast(%opus,
          host = "10.128.0.56",
          port = 8000,
          password = "whatever-you-want-it-to-be",
          mount = "pirate-radio.opus",
          playlist.safe(reload=120,"/radio/playlist.m3u"))
    volumes:
      - $PWD/radio:/radio
```

This time, we’re going to give docker-compose a -d flag, so that it detaches into the background and keeps on running, while we get our shell prompt back to get EVEN MORE WORK DONE.

```shell
$ docker-compose up -d
```

Since Docker Compose is just creating containers using Docker, we can use the docker CLI to do all the things we’re already accustomed to. For example, to see what LiquidSoap is up to, we can check the docker logs. Docker Compose names each container after the project, the service, and an index. The project name defaults to the name of the directory holding docker-compose.yml, so if yours isn’t called radio, adjust the container name to match.

```shell
$ docker logs radio_source_1
2021/03/30 13:16:36 >>> LOG START
2021/03/30 13:16:36 [main:3] Liquidsoap 1.4.4
2021/03/30 13:16:36 [main:3] Using: bytes=[distributed with OCaml 4.02 or above] pcre=7.4.6 sedlex=2.3 menhirLib=20201216 dtools=0.4.1 duppy=0.8.0 cry=0.6.4 mm=0.5.0 xmlplaylist=0.1.4 lastfm=0.3.2 ogg=0.5.2 vorbis=0.7.1 opus=0.1.3 speex=0.2.1 mad=0.4.6 flac=0.1.5 flac.ogg=0.1.5 dynlink=[distributed with Ocaml] lame=0.3.4 shine=0.2.1 gstreamer=0.3.0 frei0r=0.1.1 fdkaac=0.3.2 theora=0.3.1 ffmpeg=0.4.3 bjack=0.1.5 alsa=0.2.3 ao=0.2.1 samplerate=0.1.4 taglib=0.3.6 ssl=0.5.9 magic=0.7.3 camomile=1.0.2 inotify=2.3 yojson=1.7.0 faad=0.4.0 soundtouch=0.1.8 portaudio=0.2.1 pulseaudio=0.1.3 ladspa=0.1.5 dssi=0.1.2 camlimages=4.2.6 srt.types=0.1.1 srt.stubs=0.1.1 srt.stubs=0.1.1 srt=0.1.1 lo=0.1.2 gd=1.0a5
2021/03/30 13:16:36 [gstreamer.loader:3] Loaded GStreamer 1.16.2 0
2021/03/30 13:16:36 [frame:3] Using 44100Hz audio, 25Hz video, 44100Hz master.
... etc ...
```

That was fairly painless. Anything we can express as a docker run command can be specified, in YAML (of course), for Docker Compose. This means we’ll never get to the point of wanting to “wrap up” all the Docker stuff we’ve been playing with into something more serious.
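To make that correspondence concrete, here is a sketch of the icecast service annotated with the docker run flag that each key stands in for. The flags in the comments are illustrative, not a verbatim copy of the commands from the previous part. The commented-out keys only show where other common flags would go; none of them is needed by our recipe.

```yaml
# Sketch: the icecast service, annotated with the docker run flag each key
# replaces. The flags are illustrative, not a verbatim copy of earlier commands.
version: '3'

services:
  icecast:
    image: filefrog/icecast2:latest   # the image argument to docker run
    # container_name: icecast         # --name icecast (optional; by default
    #                                 # Compose picks a name like radio_icecast_1)
    ports:
      - '8000:8000'                   # -p 8000:8000
    environment:                      # -e ICECAST2_PASSWORD=...
      ICECAST2_PASSWORD: whatever-you-want-it-to-be
    # volumes:                        # -v /host/path:/container/path
    #   - ./radio:/radio
    # command:                        # anything after the image name
    #   - ...
```

One flag without a direct counterpart is --rm. Instead of removing containers as they exit, Compose leaves cleanup to docker-compose down, which stops and removes the containers (and the default network) that docker-compose up created.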

Docker Compose also gives us something we don’t (easily) get with plain docker run: a bit of DNS. Recall from the last post that when we told LiquidSoap where to stream to, we had to use the actual IP of the Docker host. With Docker Compose, our containers all live on the same virtual bridge network, and we get free DNS for connecting them together. From inside any of the project’s containers, the name icecast resolves to the internal bridge IP address of the icecast container. This means we can improve our compose recipe:

```yaml
---
version: '3'

services:
  icecast:
    image: filefrog/icecast2:latest
    ports:
      - '8000:8000'
    environment:
      ICECAST2_PASSWORD: whatever-you-want-it-to-be

  source:
    image: filefrog/liquidsoap:latest
    command:
      - |
        output.icecast(%opus,
          host = "icecast",
          port = 8000,
          password = "whatever-you-want-it-to-be",
          mount = "pirate-radio.opus",
          playlist.safe(reload=120,"/radio/playlist.m3u"))
    volumes:
      - $PWD/radio:/radio
```

This final version of our recipe is completely portable. You don’t have to adhere to my personal network numbering scheme to make use of this deployment asset; just download it and compose it up!
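The password still appears in two places, though. Compose can substitute variables from the shell environment, much as it already does for $PWD, or from a file named .env in the project directory. That lets you define the password once. Here is a sketch of that variation. It is not part of the original recipe. Reusing the name ICECAST2_PASSWORD for the host-side variable is just a convenient assumption. Swapping $PWD/radio for ./radio is also an optional change: it relies on Compose resolving relative paths against the directory that holds docker-compose.yml.

```yaml
# Sketch (optional variation): the shared password comes from the host
# environment or a .env file beside this file, via Compose's ${VAR}
# substitution. The host-side variable name is an assumption.
version: '3'

services:
  icecast:
    image: filefrog/icecast2:latest
    ports:
      - '8000:8000'
    environment:
      ICECAST2_PASSWORD: ${ICECAST2_PASSWORD}

  source:
    image: filefrog/liquidsoap:latest
    command:
      - |
        output.icecast(%opus,
          host = "icecast",
          port = 8000,
          password = "${ICECAST2_PASSWORD}",
          mount = "pirate-radio.opus",
          playlist.safe(reload=120,"/radio/playlist.m3u"))
    volumes:
      - ./radio:/radio   # relative to the directory holding docker-compose.yml
```

Put a line such as ICECAST2_PASSWORD=whatever-you-want-it-to-be in .env next to docker-compose.yml, and docker-compose up fills in both references. If the variable is missing, Compose warns and substitutes an empty string, which is probably not what you want.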

Before I let you go, we should verify that everything is still working. The Icecast web interface should still load, and you should be able to listen to those two goofballs on Rent / Buy / Build (the podcast) talk about how to source the components of your Cloud-Native platform.

Enjoy!