What happens when bosh reports that a disk doesn’t exist that clearly does? This is an issue that we encountered using vSphere and the troubleshooting was a little interesting.

The symptoms

We have a client with a rather large deployment in vSphere. While building the pipeline, we noticed that occasionally a microbosh deployment would fail and we’d have to perform "surgery" on the bosh-deployments.yml file. What we’d see is bosh micro deploy failing like this:

...

Stemcell info

-------------

Name: bosh-vsphere-esxi-ubuntu-trusty-go_agent

Version: 3087

Will deploy due to configuration changes

Started prepare for update

Started prepare for update > Stopping agent services. Done (00:00:01)

Started prepare for update > Unmount disk. Done (00:00:01)

Started prepare for update > Detach diskat depth 0 - 20: unable to get local issuer certificate

/var/lib/gems/1.9.1/gems/bosh_vsphere_cpi-2.0.0/lib/cloud/vsphere/disk_provider.rb:52:in `find': Could not find disk with id 'disk-e5b812c1-6e20-4365-aba1-99e24f82b889' (Bosh::Clouds::DiskNotFound)

from /var/lib/gems/1.9.1/gems/bosh_vsphere_cpi-2.0.0/lib/cloud/vsphere/cloud.rb:321:in `block in detach_disk'

from /var/lib/gems/1.9.1/gems/bosh_common-1.3072.0/lib/common/thread_formatter.rb:49:in `with_thread_name'

from /var/lib/gems/1.9.1/gems/bosh_vsphere_cpi-2.0.0/lib/cloud/vsphere/cloud.rb:319:in `detach_disk'

from /var/lib/gems/1.9.1/gems/bosh_cpi-1.3072.0/lib/cloud/internal_cpi.rb:22:in `invoke_cpi_method'

from /var/lib/gems/1.9.1/gems/bosh_cpi-1.3072.0/lib/cloud/internal_cpi.rb:10:in `method_missing'

from /var/lib/gems/1.9.1/gems/bosh_cli_plugin_micro-1.3072.0/lib/bosh/deployer/instance_manager.rb:327:in `block in detach_disk'

from /var/lib/gems/1.9.1/gems/bosh_cli_plugin_micro-1.3072.0/lib/bosh/deployer/instance_manager.rb:85:in `step'

from /var/lib/gems/1.9.1/gems/bosh_cli_plugin_micro-1.3072.0/lib/bosh/deployer/instance_manager.rb:326:in `detach_disk'

from /var/lib/gems/1.9.1/gems/bosh_cli_plugin_micro-1.3072.0/lib/bosh/deployer/instance_manager.rb:190:in `update'

from /var/lib/gems/1.9.1/gems/bosh_cli_plugin_micro-1.3072.0/lib/bosh/deployer/instance_manager.rb:102:in `block in update_deployment'

from /var/lib/gems/1.9.1/gems/bosh_cli_plugin_micro-1.3072.0/lib/bosh/deployer/instance_manager.rb:92:in `with_lifecycle'

from /var/lib/gems/1.9.1/gems/bosh_cli_plugin_micro-1.3072.0/lib/bosh/deployer/instance_manager.rb:102:in `update_deployment'

from /var/lib/gems/1.9.1/gems/bosh_cli_plugin_micro-1.3072.0/lib/bosh/cli/commands/micro.rb:179:in `perform'

from /var/lib/gems/1.9.1/gems/bosh_cli-1.3072.0/lib/cli/command_handler.rb:57:in `run'

from /var/lib/gems/1.9.1/gems/bosh_cli-1.3072.0/lib/cli/runner.rb:56:in `run'

from /var/lib/gems/1.9.1/gems/bosh_cli-1.3072.0/bin/bosh:19:in `<top (required)>'

from /usr/local/bin/bosh:23:in `load'

from /usr/local/bin/bosh:23:in `<main>'

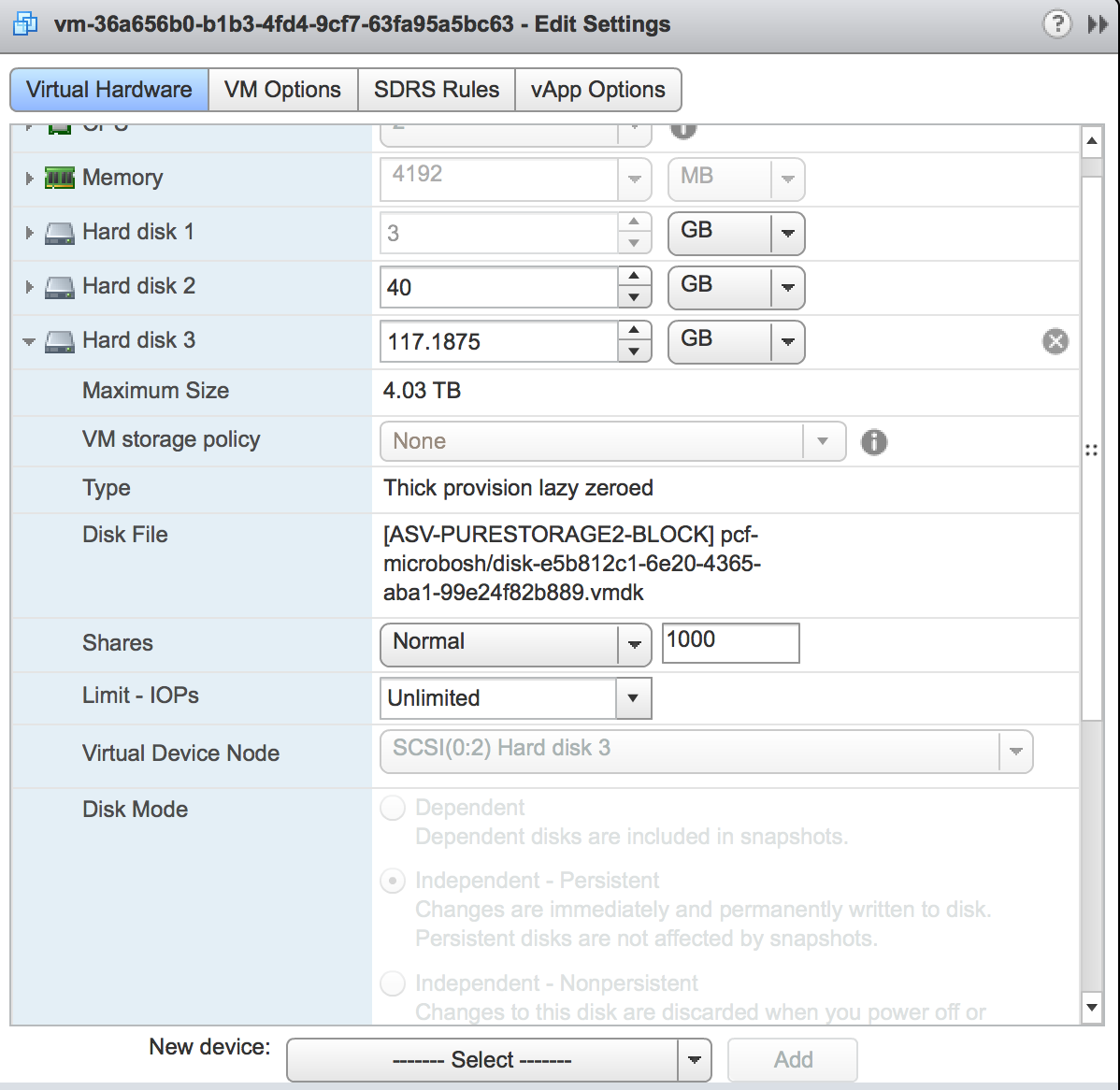

Looking at vSphere, the disk did in fact exist:

Not only did it exist, it belongs to the VM CID that is in the bosh-deployments.yml file:

---

instances:

- :id: 1

:name: microbosh

:uuid: {{UUID}}

:stemcell_cid: {{SCID}}

:stemcell_sha1: {{SSHA}}

:stemcell_name: bosh-stemcell-3087-vsphere-esxi-ubuntu-trusty-go_agent

:config_sha1: {{CONF}}

:vm_cid: vm-36a656b0-b1b3-4fd4-9cf7-63fa95a5bc63

:disk_cid: disk-e5b812c1-6e20-4365-aba1-99e24f82b889

What did the log say?

The log was a little less than helpful, as the deployment seemed to go through the normal motions the deploy until encountering an error such at this one:

...

I, [2015-09-28T14:16:35.558692 #28566] [attach_disk(vm-36a656b0-b1b3-4fd4-9cf7-63fa95a5bc63, disk-e5b812c1-6e20-4365-aba1-99e24f82b889)] INFO -- : Attaching disk

I, [2015-09-28T14:16:37.683131 #28566] [attach_disk(vm-36a656b0-b1b3-4fd4-9cf7-63fa95a5bc63, disk-e5b812c1-6e20-4365-aba1-99e24f82b889)] INFO -- : Finished attaching disk

I, [2015-09-28T14:17:10.390309 #28566] [0x783ffc] INFO -- : Director is ready:

...

E, [2015-09-28T15:16:26.775114 #29900] [0xcf5800] ERROR -- : not unmounting disk-e5b812c1-6e20-4365-aba1-99e24f82b889 as it doesn't belong to me: []

I, [2015-09-28T15:16:28.919513 #29900] [detach_disk(vm-36a656b0-b1b3-4fd4-9cf7-63fa95a5bc63, disk-e5b812c1-6e20-4365-aba1-99e24f82b889)] INFO -- : Detaching disk: disk-e5b812c1-6e20-4365-aba1-99e24f82b889 from vm: vm-36a656b0-b1b3-4fd4-9cf7-63fa95a5bc63

...

So basically the disk would successfully attach to the VM, but then when it came time to detach the VM would respond with "it isn’t mine!". I feel like there’s a bad joke in here, but I digress.

So what happened?

While we continued to investigate we started to note that some of the INFO statuses did not make sense. For example, we saw this VM being created:

...

INFO -- : Setting VM env: {"vm"=>{"name"=>"vm-7c516a12-8ad5-4033-8a92-ff35030e3a2d" ...

while the VM CID in the bosh-deployments.yml file was vm-700f482f-36cf-4c86-95c5-c86a18499c4a. This caused us to investigate a little further.

What happened

Essentially, what appears to have happened is that somehow the original deployment wasn’t saved correctly or at all in bosh-deployments.yml (at this point it is hard to say). Then for every subsequent redeploy it would try to create a new microbosh with the same specifications: including the same IP address. Since vSphere allows multiple VMs to have the same IP without warning, by the time we caught this issue there were over a dozen VMs in vSphere with the same IP address. This collision is what caused confusion when creating/destroying the VMs as part of the normal deployment process, which would then fail.

Since we are deploying the microboshes via a pipeline, and thus whenever a microbosh deploy fails for any reason we see this issue anew, we are adding a script to our vSphere pipeline to basically detect if an IP is in use and if it is in use only by the VM specified by bosh-deployments.yml.